This dashboard shows the prevalence of dis/misinformation on COVID-19

COVID-19 is not only a public health challenge—it’s a public information challenge. It’s proven difficult to wrangle even basic case and mortality data on the pandemic. And if you’ve spent time on the internet in the last several months, you’ve probably felt the challenge of identifying what information on the coronavirus is reliable—and what is not.

Dis/misinformation around COVID-19 is not only frustrating—it can be extremely dangerous. People have died after following unverified treatment suggestions, and conflicting information about the value of masks has led many people to believe they don’t need to wear them (fact check: you absolutely do).

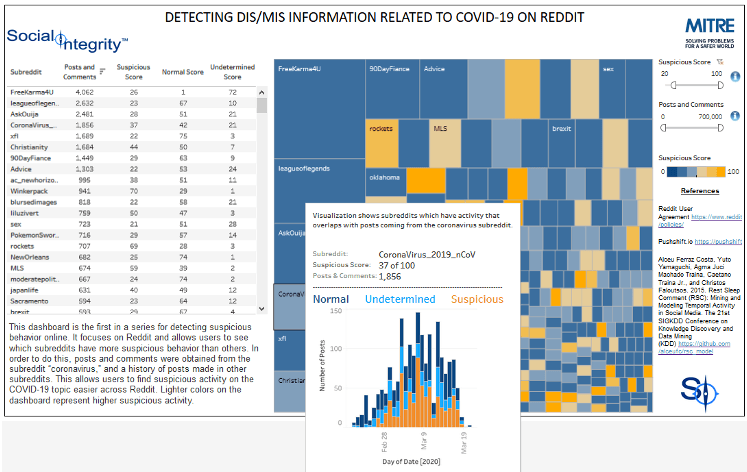

To give a sense of just how prevalent COVID-19 dis/misinformation is on the internet, our partners at the COVID-19 Healthcare Coalition (C19HCC)—a consortium of private sector and nonprofit organizations dedicated to improving the national pandemic response—decided to visualize it in Tableau. Through their Coronavirus Subreddit Dashboard, the C19HCC team shows where on Reddit faulty information about the virus is most likely to be. Reddit is just one social platform where dis/misinformation can spread, says Jennifer Mathieu, Chief Technologist for Social Analytics and Integrity at MITRE, which is part of C19HCC. But visualizing dis/misinformation on one platform can give users a sense of how widespread the issue is.

As a leading research organization for government agencies, MITRE has been analyzing dis/misinformation on social media for a few years, Mathieu says. MITRE believes that developing frameworks to detect and remove dis/misinformation from social platforms is a critical global challenge. COVID-19 has accelerated interest in the work on this topic and has brought in more funding to pursue research on how faulty information on the virus spreads through social channels.

The subreddit dashboard is the first in a series that MITRE is planning on dis/misinformation about the virus, Mathieu says. To access the data, MITRE followed Reddit’s user agreement and used pushshift.io, a search engine that enables real-time tracking of posts and analytics on Reddit. The MITRE team pulled every post to the coronavirus subreddit over one month (February 20 to March 19), then pulled the submission history of every user who had posted there, to see which related subreddits those users were also active in.
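The two collection steps (a fixed window of subreddit posts, then each poster's wider history) can be sketched as a small filtering and counting job. This is a minimal sketch, not MITRE's pipeline: it assumes the posts have already been downloaded as plain dicts with hypothetical `author`, `subreddit`, and `created_utc` keys, it assumes the window falls in 2020, and the helper names `posts_in_window` and `related_subreddits` are illustrative.

```python
from collections import Counter
from datetime import datetime, timezone

# Hypothetical record shape: a post is a dict with "author", "subreddit",
# and "created_utc" (Unix seconds). Real downloaded records carry more fields.


def posts_in_window(posts, subreddit, start, end):
    """Posts made to `subreddit` with start <= creation time < end."""
    lo, hi = start.timestamp(), end.timestamp()
    return [
        p for p in posts
        if p["subreddit"].lower() == subreddit.lower()
        and lo <= p["created_utc"] < hi
    ]


def related_subreddits(window_posts, histories, home):
    """Count posts to other subreddits by every author active in the window.

    `histories` maps an author name to the list of that author's posts.
    """
    authors = {p["author"] for p in window_posts}
    counts = Counter()
    for author in authors:
        for p in histories.get(author, []):
            if p["subreddit"].lower() != home.lower():
                counts[p["subreddit"]] += 1
    return counts


# One-month window; the year 2020 is an assumption.
# END is exclusive, so posts made on March 19 are still included.
START = datetime(2020, 2, 20, tzinfo=timezone.utc)
END = datetime(2020, 3, 20, tzinfo=timezone.utc)

if __name__ == "__main__":
    def ts(month, day):
        return datetime(2020, month, day, 12, tzinfo=timezone.utc).timestamp()

    posts = [
        {"author": "alice", "subreddit": "Coronavirus", "created_utc": ts(2, 25)},
        {"author": "bob", "subreddit": "Coronavirus", "created_utc": ts(3, 19)},
        {"author": "carol", "subreddit": "Coronavirus", "created_utc": ts(4, 1)},
    ]
    histories = {
        "alice": [{"author": "alice", "subreddit": "news", "created_utc": ts(1, 5)}],
        "bob": [{"author": "bob", "subreddit": "worldnews", "created_utc": ts(3, 2)}],
    }
    window = posts_in_window(posts, "Coronavirus", START, END)
    print(len(window))  # 2: carol's April post falls outside the window
    print(related_subreddits(window, histories, "Coronavirus"))
```

Keeping the window end exclusive avoids off-by-one mistakes at the month boundary, and counting only the *other* subreddits in each history is what turns a single-subreddit crawl into a view of related communities.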

Once the data was collected, the MITRE team ran it through an algorithm designed to identify non-human behavior. This “rest, sleep, comment” algorithm, Mathieu says, examines the timing of a user's posts to estimate the likelihood that the user is a bot or other computer program rather than a human poster. If a user is commenting at times when a person would normally be resting or sleeping, the account is more likely to be non-human, and non-human accounts are more likely to be amplifying dis/misinformation, Mathieu says.
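To make the timing idea concrete, here is a deliberately simplified heuristic in the same spirit. It is not the “rest, sleep, comment” model itself, whose details the article does not describe; it is a hypothetical stand-in with two signals: the share of posts made during assumed sleeping hours, and whether an account active for at least a day ever pauses long enough to sleep. The night window, the five-hour rest threshold, the 30% cutoff, and the `utc_offset_hours` parameter (Reddit does not expose a user's time zone) are all assumptions.

```python
from datetime import datetime, timedelta, timezone

# Illustrative thresholds only; MITRE's actual parameters are not described here.
NIGHT_HOURS = range(1, 6)        # 01:00-05:59 in the account's assumed local time
MIN_REST = timedelta(hours=5)    # a person usually pauses at least this long in a day
NIGHT_CUTOFF = 0.3               # share of night-time posts treated as suspicious


def night_fraction(timestamps, utc_offset_hours=0):
    """Share of posts made during assumed sleeping hours, in local time."""
    if not timestamps:
        return 0.0
    tz = timezone(timedelta(hours=utc_offset_hours))
    night = sum(
        1 for t in timestamps if datetime.fromtimestamp(t, tz).hour in NIGHT_HOURS
    )
    return night / len(timestamps)


def longest_gap(timestamps):
    """Longest pause between consecutive posts, or None with fewer than two posts."""
    ts = sorted(timestamps)
    if len(ts) < 2:
        return None
    return timedelta(seconds=max(b - a for a, b in zip(ts, ts[1:])))


def looks_automated(timestamps, utc_offset_hours=0):
    """Flag accounts that post heavily at night or never rest over a full day."""
    ts = sorted(timestamps)
    if len(ts) < 2:
        return False  # too little activity to judge either way
    active_span = timedelta(seconds=ts[-1] - ts[0])
    never_rests = active_span >= timedelta(days=1) and longest_gap(ts) < MIN_REST
    return night_fraction(ts, utc_offset_hours) >= NIGHT_CUTOFF or never_rests
```

An account posting every 30 minutes around the clock is flagged because it never rests, while one posting at 9:00, 12:00, 18:00, and 21:00 each day is not. A human night owl who posts mostly at 3:00 would also be flagged, which is why a timing signal like this can only mark *potential* bots rather than prove automation.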

It’s worth noting, though, that the algorithm does not pass judgment on the content of comments made by humans. “We don’t address if something is accurate or not; that’s a hard technical challenge,” Mathieu says. The difficulty stems partly from the tension between deciding what is true and protecting free speech. Mathieu gives the example of a mother who might be passionately against vaccination. “If that’s a sincere belief you have and you put it online, you’re representing yourself: that’s free speech,” she says. “It’s tricky to have an algorithm say whether that’s true or not.”

The dashboard doesn’t capture the veracity of every statement about COVID-19 on Reddit, but it does give users a sense of how much of the content reflects genuine discussion among humans. Looking at the dashboard, you can’t drill down to see which specific Reddit accounts the algorithm flagged as connected to the spread of dis/misinformation. But you can see that each subreddit analyzed has been assigned a color based on the estimated percentage of potential bots posting in it.
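The aggregation behind a per-subreddit color can be sketched as follows: count the distinct accounts posting in each subreddit, compute the percentage that a heuristic flagged, and bin that percentage into a color category. This is a minimal sketch; the bin boundaries, the `low`/`medium`/`high` labels, and the `(author, subreddit)` input shape are assumptions, since the article does not give the dashboard's thresholds or palette.

```python
from collections import defaultdict

# Hypothetical color bins as (exclusive upper bound in percent, label).
# The dashboard's actual thresholds and palette are not given in the article.
BINS = [(5.0, "low"), (15.0, "medium"), (float("inf"), "high")]


def bot_share_by_subreddit(activity, flagged):
    """Percent of distinct posting accounts in each subreddit that were flagged.

    `activity` is an iterable of (author, subreddit) pairs and `flagged` is the
    set of authors a timing heuristic marked as potential bots.
    """
    authors = defaultdict(set)
    for author, subreddit in activity:
        authors[subreddit].add(author)
    return {
        subreddit: 100.0 * len(users & flagged) / len(users)
        for subreddit, users in authors.items()
    }


def color_for(percent):
    """Map a percentage to the first bin whose upper bound it falls below."""
    for upper, label in BINS:
        if percent < upper:
            return label


def subreddit_colors(activity, flagged):
    """Return only per-subreddit color labels, never individual account names."""
    shares = bot_share_by_subreddit(activity, flagged)
    return {subreddit: color_for(pct) for subreddit, pct in shares.items()}
```

Returning only aggregates mirrors the dashboard's design: readers see how heavily each subreddit is affected without the output exposing which accounts were flagged. Counting distinct accounts rather than posts keeps one prolific bot from dominating a subreddit's score, though a post-weighted share would be an equally reasonable choice.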

The dashboard is meant to be a window into just how prevalent bots and other programs that spread dis/misinformation are on social platforms. “We understand that dis/misinformation is an enormous problem during COVID-19, but we need a way to communicate that out to a broader audience,” Mathieu says. MITRE’s hope is that getting more people engaged in recognizing and flagging dis/misinformation on social platforms could help limit its spread.

“There’s a fair amount of consensus around what could be done to make the situation better,” Mathieu says. “It all revolves around detecting accounts that are amplifying dis/misinformation, addressing them, and sharing data across researchers and various groups so we can work with the whole social media ecosystem to advocate for stronger frameworks around stopping the spread.”
