zulily’s top 10 tips for self-service analytics with Google BigQuery and Tableau

Editor’s note: Today’s post comes from Sasha Bartashnik, zulily’s Marketing Analytics Manager. zulily is a $1.6 billion fast-growing e-commerce company that launches 100+ new sales a day to tantalize women with the thrill of new and unique finds. This is part two of the series—see part one here.

In part one of the series, we shared why we created a self-service marketing analytics platform with Tableau and Google BigQuery. In this post, we will provide some tips and tricks for integrating BigQuery with Tableau. Below are some best practices we learned, which you may want to try when building your own self-serve analytics platform with BigQuery and Tableau.

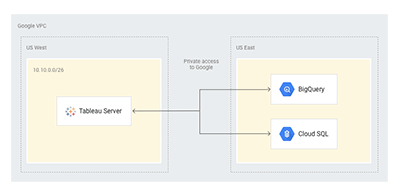

1. Reduce latency by using Tableau Server on Google Compute Engine

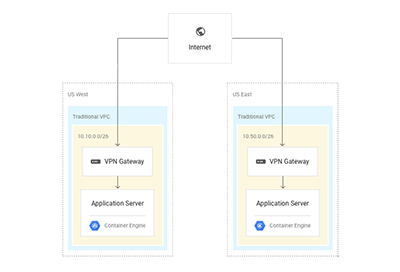

In a traditional model, regions are separate VPCs and you have to cross the Internet to reach them. Running Tableau Server on Compute Engine uses the same network Google uses for applications like Search, YouTube, and Gmail. That means your Tableau Server talks to BigQuery over Google’s private backbone without going over the public Internet, and without any additional setup.

Traditional VPC

Google Cloud

Compute Engine also offers helpful features like live migration, which can greatly increase Tableau Server’s uptime because your Tableau Server virtual machine keeps running even when a host event occurs. Tableau administrators can also take advantage of custom instance sizing, which lets you right-size your Tableau deployments instead of over-provisioning to a “one size fits all” instance size.

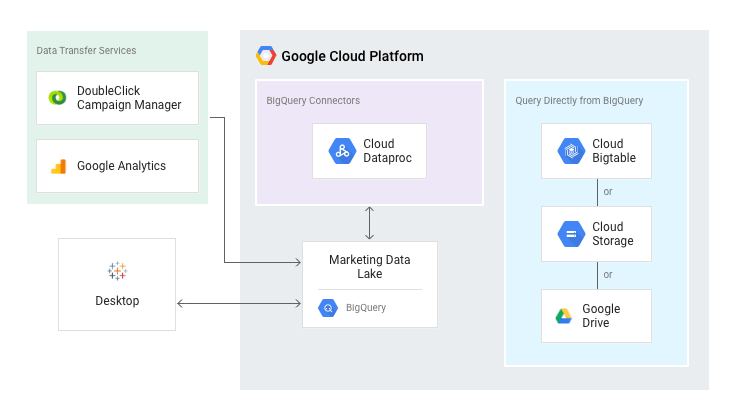

2. Use Federated Sources and point Tableau at BigQuery

For data within Google Cloud, you should take advantage of BigQuery’s ability to query external data sources and treat BigQuery as your data lake. For example, Tableau has direct connectors to Google Analytics and Google Sheets, which are excellent for ad hoc exploration. In many scenarios, however, you may want to combine data on ads served with data on website interactions like clicks and hovers. In such scenarios, using BigQuery as a consolidated data repository can reduce the amount of data that needs to be pushed over the network and into Tableau for analysis.

Tableau users often find this model particularly useful when they need to report on manually updated Google Sheets data alongside other Google BigQuery data. In scenarios where you want to combine Google BigQuery data with data from non-Google data sources for ad hoc exploration, use Tableau’s cross-database joins feature.

3. Create tables in BigQuery that match your dashboard requirements

In BigQuery, data storage is cheap! Take advantage of this by creating additional tables that can be queried by your dashboard without having to process the data. In other words, if you know what data will go into your dashboard, materialize those `JOIN`s and pre-aggregate anything that your business users won’t need to drill into. You’ll also want to unnest any nested and repeated fields that you might otherwise have trouble getting into Tableau’s flat data reporting structure.
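As a rough illustration of materializing a `JOIN` and pre-aggregating, here is a minimal sketch. It uses Python’s built-in SQLite as a local stand-in for BigQuery, and the `orders`, `customers`, and `dashboard_daily_sales` tables are hypothetical names invented for the example, not part of our platform.

```python
import sqlite3

# SQLite stands in for BigQuery here; all table and column names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, order_date TEXT, amount REAL);
    CREATE TABLE customers (customer_id INTEGER, region TEXT);
    INSERT INTO orders VALUES
        (1, 10, '2017-01-01', 20.0),
        (2, 10, '2017-01-01', 5.0),
        (3, 11, '2017-01-01', 12.5),
        (4, 11, '2017-01-02', 7.5);
    INSERT INTO customers VALUES (10, 'West'), (11, 'East');

    -- Materialize the JOIN and pre-aggregate to the grain the dashboard needs.
    CREATE TABLE dashboard_daily_sales AS
    SELECT o.order_date, c.region,
           COUNT(*) AS order_count,
           SUM(o.amount) AS total_amount
    FROM orders AS o
    JOIN customers AS c ON c.customer_id = o.customer_id
    GROUP BY o.order_date, c.region;
""")

# The dashboard now reads a small, flat table with no JOIN at query time.
daily_sales = conn.execute(
    "SELECT order_date, region, order_count, total_amount "
    "FROM dashboard_daily_sales ORDER BY order_date, region"
).fetchall()
print(daily_sales)
```

The same shape of statement, a `SELECT` with the `JOIN` and `GROUP BY` written into a destination table, is what you would schedule against BigQuery.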

For ongoing updates of these tables, Google Apps Script has access to the BigQuery API and can be a quick and easy way to run BigQuery queries on an automated schedule.

Keep in mind that in addition to properly structuring the underlying data, the design of the dashboard itself can also play a major role in performance. This whitepaper offers some excellent tips on how you can use clever design principles to create workbooks that are visual, interactive, fast and easy to understand.

4. Take advantage of Cloud Datalab to structure data for Tableau

When you need to do the data preparation described in the previous step, make use of Google Cloud Datalab. This is a powerful interactive tool based on Jupyter notebooks that can be used to explore, analyze, transform data and build machine learning models. It connects to BigQuery seamlessly and can easily be stopped and started when you need to use it.

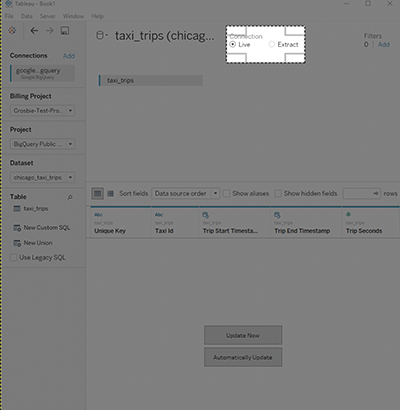

5. Process large datasets with a live connection in BigQuery

When connecting to BigQuery from Tableau, you will want to take advantage of BigQuery’s ability to process large datasets and only bring results across the network. You should default to setting your Tableau connection to BigQuery as “Live” unless you have a specific reason to extract the data.

For an overview of what some of those reasons might be, read this excellent post by Tableau Zen Master Jonathan Drummey, where he outlines several use cases in which extracts provide a benefit. There is one important point to make about data extracts: they are a complement to, not a replacement for, data that might be in Google BigQuery. While they can be used to collect and aggregate data over time (that is, to incrementally add data on a periodic cycle), this should be a tactical rather than long-term solution. Incremental updates do not support update or delete actions on records that have already been processed; changing these requires a full reload of the extract.

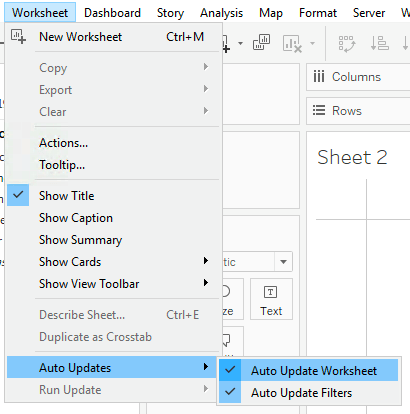

With this live connection, you may also want to consider turning off automatic updates. When building a dashboard, as you place fields on a shelf, Tableau generates the view by automatically querying the data source. If you are creating a dense data view, the queries might be time consuming and significantly degrade system performance. In this case, you can instruct Tableau to turn off queries while you build the view. You can then turn queries back on when you are ready to see the result.

6. Take advantage of BigQuery’s caching when analyzing data in Tableau

Often, before we’re ready to build a dashboard, we use Tableau to do interactive analysis and “play” with the data in order to develop an understanding of it before trying to make it consumable by business users. During this phase, you’ll want to take advantage of BigQuery’s caching.

BigQuery writes all query results to a table, which is either explicitly identified by the user (a destination table) or is a temporary, cached results table. Cached results are reused for about 24 hours. Take advantage of this in your analysis phase by first running a very widely scoped query that contains all the data you plan to analyze. For example, let’s say you plan to run an analysis across three types of records: a, b, and c. Instead of running three separate queries for `WHERE col1 = 'a'`, `WHERE col1 = 'b'`, and `WHERE col1 = 'c'`, start your analysis by running one query against your source table that outputs all the records into a cached destination table.

Example:

`SELECT col1 FROM source WHERE col1 = 'a' OR col1 = 'b' OR col1 = 'c'`
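If the list of record types changes often, the wide query can be generated rather than typed by hand. The following is only a sketch: `build_wide_query` is a hypothetical helper, it uses `IN` as an equivalent to the chained `OR` conditions, and it assumes the values are trusted literals rather than user input.

```python
def build_wide_query(table, column, values):
    """Return one query covering every value, instead of one query per value."""
    # Values are assumed to be trusted literals; use query parameters for user input.
    quoted = ", ".join(f"'{value}'" for value in values)
    return f"SELECT {column} FROM {table} WHERE {column} IN ({quoted})"


wide_query = build_wide_query("source", "col1", ["a", "b", "c"])
print(wide_query)
```

Running the single generated query once and pointing later analysis at its result table means BigQuery scans the source table one time instead of once per record type.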

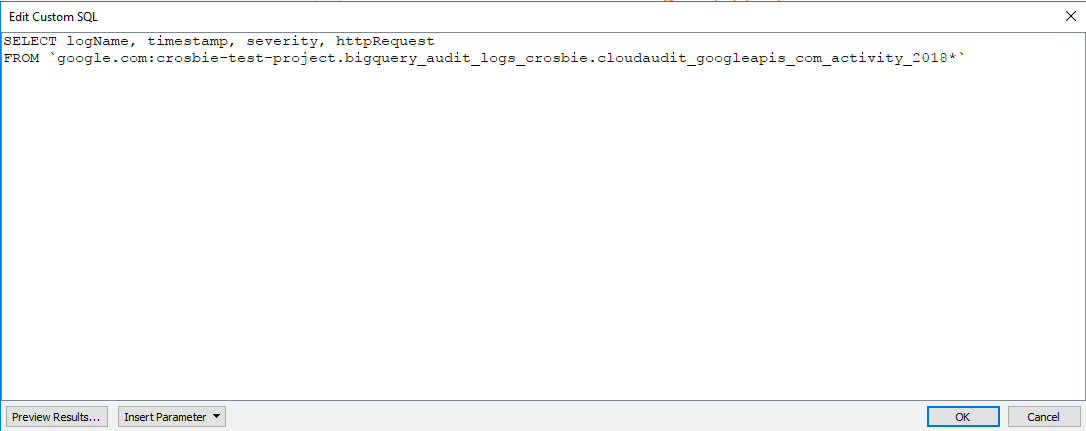

7. Use Tableau’s Custom SQL feature to take advantage of partitions

BigQuery supports partitioning tables by date. However, at the time of writing, Tableau does not recognize the pseudo column needed to filter on the partition date. To get around this when working with partitioned tables in Tableau, use Tableau’s Custom SQL option to specify the date range of interest and avoid scanning the entire table.
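A Custom SQL statement of this kind might be generated as in the sketch below. It assumes the pseudo column in question is `_PARTITIONTIME` (the one BigQuery exposes on ingestion-time partitioned tables), and the table name `mydataset.events` and the helper `partition_filtered_sql` are hypothetical. How the table reference must be quoted depends on the SQL dialect your connection uses.

```python
from datetime import date


def partition_filtered_sql(table, start, end):
    """Build Custom SQL that reads only partitions from start to end, inclusive."""
    # Assumption: the table is ingestion-time partitioned and exposes _PARTITIONTIME.
    return (
        f"SELECT * FROM {table} "
        f"WHERE _PARTITIONTIME BETWEEN TIMESTAMP('{start.isoformat()}') "
        f"AND TIMESTAMP('{end.isoformat()}')"
    )


custom_sql = partition_filtered_sql("mydataset.events", date(2017, 1, 1), date(2017, 1, 7))
print(custom_sql)
```

The printed statement can be pasted into Tableau’s Custom SQL dialog; because the filter is on the partition pseudo column, BigQuery only needs to read the partitions in that range.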

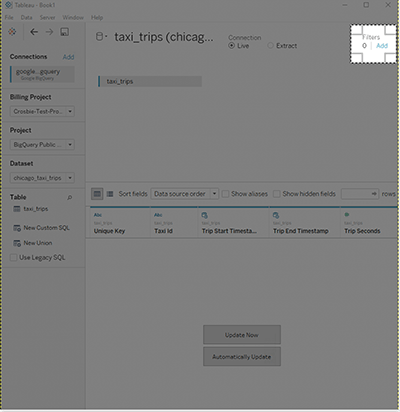

8. Limit data transfer with predicate pushdown

When building your Tableau dashboard, you should add filters before adding fields to the report. As long as your connection is set to “Live”, this will push the filter (`WHERE` clause) to BigQuery. While this will not change the amount of data that gets scanned in BigQuery, it will greatly reduce the data that needs to come back over the network.

If you know that a particular workbook will always have certain filters applied, you can also set those at the data source level. Here’s a good post from the Tableau community that explains the different ways to filter and restrict your data.
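To make the effect of pushing a filter down concrete, here is a small sketch that again uses SQLite as a local stand-in for BigQuery. The `clicks` table and its columns are hypothetical. It compares how many rows cross the connection when the client filters versus when the database does.

```python
import sqlite3

# SQLite stands in for BigQuery; the clicks table and its columns are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE clicks (campaign TEXT, clicks INTEGER)")
conn.executemany(
    "INSERT INTO clicks VALUES (?, ?)",
    [("email", 3), ("search", 5), ("email", 2), ("social", 1)],
)

# Without pushdown: every row crosses the connection, then the client filters.
all_rows = conn.execute("SELECT campaign, clicks FROM clicks").fetchall()
filtered_locally = [row for row in all_rows if row[0] == "email"]

# With pushdown: the WHERE clause runs in the database, so only matches come back.
pushed_down = conn.execute(
    "SELECT campaign, clicks FROM clicks WHERE campaign = ?", ("email",)
).fetchall()

print(len(all_rows), len(pushed_down))
```

Both approaches produce the same filtered rows, but the pushed-down query returns only the matching rows to the client, which is the saving a live connection with filters gives you.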

9. Build Tableau extracts outside of Tableau

While the majority of tasks are better accomplished with Live Connections to Google BigQuery, there may be rare situations which call for extracting the data into a Tableau Data Extract (TDE).

Since the Tableau to BigQuery connector is optimized for Live Connections, you may experience slower than usual extract times from within Tableau for larger BigQuery datasets. To vastly improve this, you should set up a process that exports the BigQuery data into something like Google Cloud Storage and then use the Tableau Extract API to write a custom extract from the flat files in Google Cloud Storage.

For an overview of when you may need an extract rather than a live connection, read this post by Tableau customer champion and evangelist Jonathan Drummey. There is one important point to make about data extracts: they are not a replacement for data that might be in Google BigQuery. While they can be used to collect and aggregate data over time, this should be a tactical rather than long-term solution. Incremental updates do not support update or delete actions on records that have already been processed. Any data change other than an append requires a full reload of the extract.

10. Use ODBC if you require service accounts (Tableau server connection)

In general, we recommend that you use Tableau’s native connector with Google BigQuery for best results. The named connector is optimized for the database. However, in certain scenarios, you may want to run under a generic Google Service Account rather than as an individual user.

Google Cloud Service Accounts are an excellent way to provide application to application security. Authentication between Tableau Server and Google BigQuery is a common use case for this. However, at the time of this writing, service account authentication is not supported in the Tableau BigQuery connector.

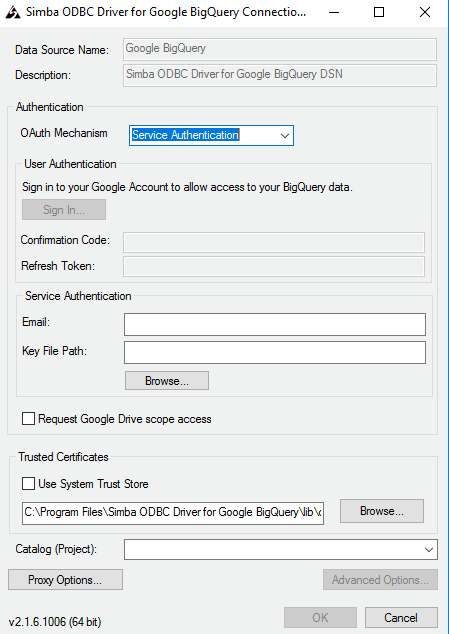

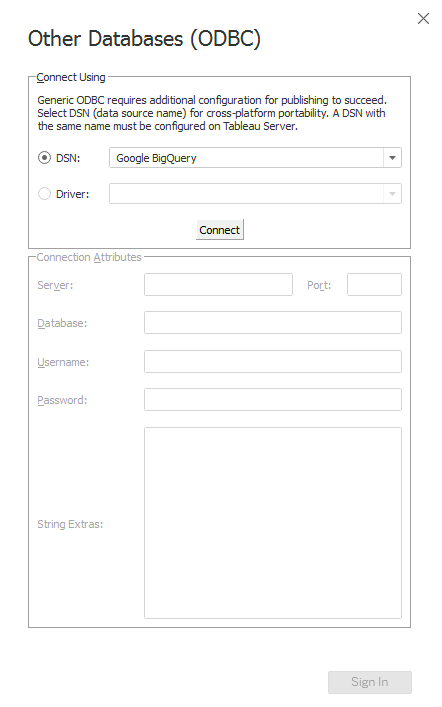

However, Tableau does offer the ability to connect via generic ODBC drivers and you can use the Simba Drivers for Google BigQuery to connect to BigQuery from Tableau using service accounts.

Simply install the ODBC driver on the Tableau Server machine or on the workstation running Tableau Desktop, configure the DSN with the service account information, and use “Other Databases (ODBC)” in Tableau.

With that said, a couple of words of caution. Know that Tableau provides limited customer support for connections using Other Databases (ODBC). ODBC is not supported on Tableau Online either.

Conclusion

I hope that this post shares the value of what we did—and what you can do—with Tableau and BigQuery in a self-service analytics model. I also hope you’ve found some tips that you can use, whether you are just getting started with Tableau and BigQuery or have already started using this combination on your own journey to self-service analytics.

To learn more about zulily—who we are, what we do, and how to join our team— check out our website or read our tech@zulily blog.
