Scraping the IMDb for Use in Tableau

Dr. Konstantin Greger is Associate Sales Consultant at Tableau. This is an abridged version of Konstantin’s original blog post from his personal website. Originally written for attendees of the Tableau Cinema Tour, it might be equally helpful for people entering IronViz "Silver Screen"; hence we are re-publishing it here.

I decided to produce this write-up of how to extract the data from the Internet Movie Database (IMDb), as copyright reasons make it impossible to provide the ready-made data. But with this walk-through, everybody should be able to build their own dataset!

The Tools

- The collection of data and main extraction of the usable information is happening using a number of Python scripts. For the following to work, we assume Python 2.7 is on your machine – I haven’t tested the scripts on Python 3.

- You’ll need a tool for extracting .gz files. On Linux machines I recommend gzip or gunzip, for Windows 7zip is a good free option – commercial WinZip can also handle them.

- The main script writes the output data directly into a PostgreSQL database, so you will need access to that. The default setup of the scripts assumes a local installation, but a remote database will also work. I developed and tested the scripts using PostgreSQL 9.5.

- You’ll also need a text editor. Personally, I like Notepad++, but any given editor should be fine. Alternatively, you could resort to a true Python IDE such as Spyder. For having a look into the rather massive source data files I also recommend Sublime.

- It probably won’t be necessary for simply using the scripts, but since they make heavy use of regular expressions I used regex101.com a lot. (Hint: Don’t forget to set the “flavor” on the left to “Python”…)

- And of course, we need Tableau Desktop, to visualize the data! In my case I save the data in a PostgreSQL database; to connect to this, I am using the Professional Edition of Tableau Desktop. Users of the free Public Edition will need to export their data as a CSV file.

The Data Source

The data stems from what is most likely the most famous and complete source for anything and everything about movies, cinema, and TV: the Internet Movie Database (IMDb). According to their own statistics, the IMDb currently contains data on 4,431,127 productions and 8,047,620 people. Of course, one could just start to crawl and download all that data, but this would not only be against their terms of usage but would also probably be awfully slow. Since the IMDb changes so quickly, inconsistent data would probably be the outcome.

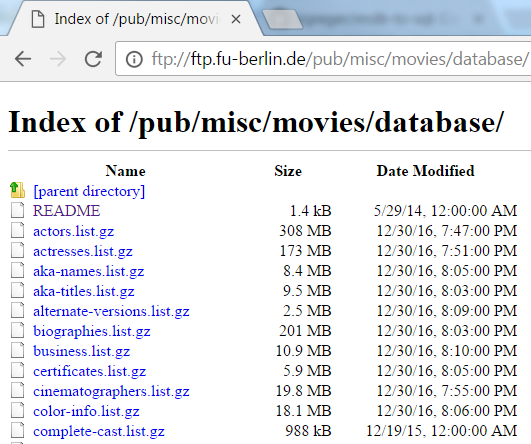

Therefore it’s a lot easier to just download the complete IMDb in one go! Unfortunately, the data model underlying the IMDb is not just available for download. That would be too easy and also defy the existence of this blog post. Well hidden under the headline “Alternative Interfaces” it is possible to download “Plain Text Data Files”. Since I’m in Germany, I used the mirror at the FU Berlin – thanks to all the people providing IMDb mirror sites!

If you (just as myself) think there should be an IMDb API, please feel free to participate in this survey!

The Workflow

Introduction

The following might be a bit technical. That said, it’s not necessary to understand every single step in detail to be able to use the script and get the data.

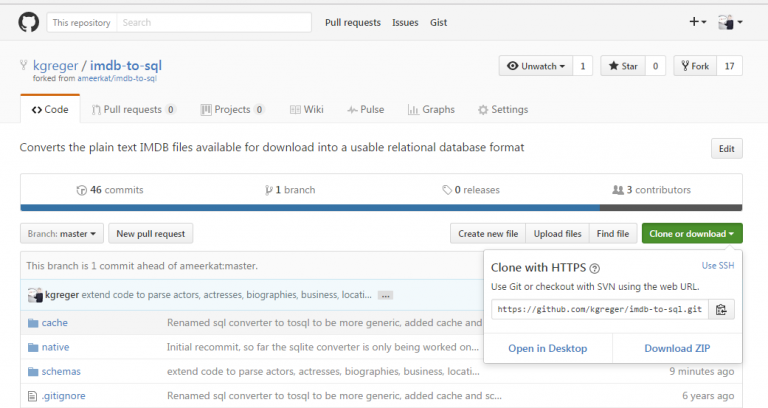

I didn’t start from scratch but built upon the great work done by Ameer Ayoub which is available on his GitHub repository for download. The script reads the source files, parses them into a more usable format, and then saves the results into a database. Sounds straightforward enough, but isn’t as it turned out. Ameer did a great job in making his script very modular and well commented, while at the same time keeping it flexible and extensible.

I forked and extended his repository. While Ameer had mostly the reusability in mind when writing his code, I need to extract the data for a work project. While I extended the code with topics like ratings, business data, filming locations, and biographical data, I did all of this exclusively for PostgreSQL as a backend. So if anyone is planning to use it with MySQL or SQLite in the background please feel free to extend my code accordingly. PostgreSQL works out-of-the-box.

Step 0: Preparations

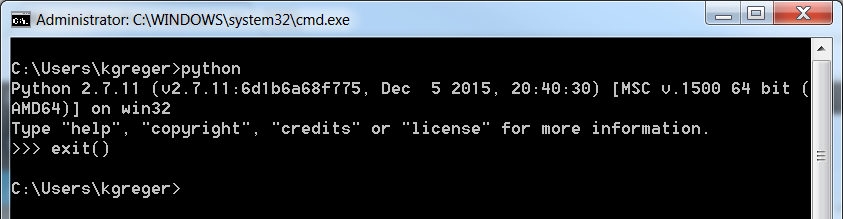

All the tools mentioned above should be installed and tested. The Python install can be tested by entering python on a command line. The result should look like this:

The easiest way out of the Python command line interface (indicated by the >>>) is by entering exit(). All the examples shown in this blog post were run on a Windows machine, but the code is platform independent so it should work on Mac OS and Linux.

Step 1: Download the Script

Ameer’s script with my changes and extensions is available on my GitHub repository and can be either forked, cloned, or downloaded as a ZIP archive.

Step 2: Download the Source Data

The IMDb data can be easily downloaded from the above-mentioned FTP server at FU Berlin:

They are zipped text files with the extension .list. The script in its current version uses only the following files:

- actors.list.gz

- actresses.list.gz

- biographies.list.gz

- business.list.gz

- locations.list.gz

- movies.list.gz

- ratings.list.gz

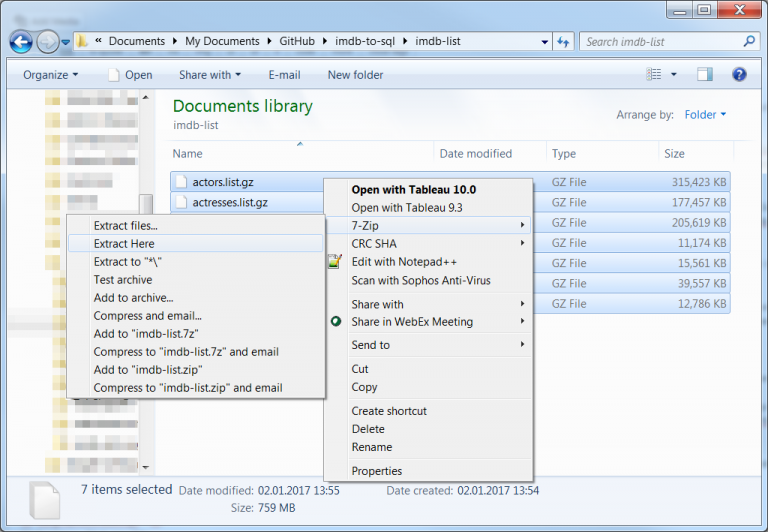

To make the execution of the script as painless as possible (read: without having to make too many changes) I recommend saving those files into a subfolder of the scripts’ location. Ideally, it should be called imdb-list. (Hint for GitHub users: exclude this sub folder in your .gitignore – otherwise all the data will be synced into your repository…) The .gz files can be deleted after unpacking them:

Also, the text files’ encoding needs to be changed to UTF-8 from ISO-8859-1 (“Latin-1”). This can be done very easily in a text editor. In Sublime using File > Save with Encoding > UTF-8; in Notepad++ using Encoding > Convert to UTF-8 and then File > Save.

Step 3: Tailoring the Script

This is probably the hardest bit. But you should be alright if you followed my recommendations earlier and want to keep everything on the default settings. There might be cases where the script needs to be run multiple times before everything works as expected, but nothing can go catastrophically wrong, so don’t fret.

What I so far referred to as “the script” is actually a collection of multiple Python source files (indicated by the .py file extension) and SQL code (indicated by the extension .sql in sub folder schemas). Both the code and the SQL queries are commented sufficiently to understand quickly what’s going on. This shouldn’t even be necessary for most readers, unless you really want to understand how they work.

- The most central component is the tosql.py file. It takes care of both parsing of the input files using regular expressions and saving the output data into the database.

- The file settings.py contains, as you might suspect from the file name, all the main settings to the script.

- Furthermore the script needs to call the files postgres.drop.sql and postgres.use_dict.sql from sub folder schemas to initialize the database.

- The files postgres.index.sql and postgres.postprocess.sql will be run manually in the end.

A good starting point is to take care of the basic settings in settings.py. By default it looks like this:

The parts needing our attention are marked above:

- Line 10 holds the name of the database we create in PostgreSQL – by default that’s imdb_data.

- Line 13 holds the database server’s address – by default that’s 127.0.0.1 for a local server.

- Lines 14 and 15 hold the login credentials for the database.

- Line 16 defines whether an existing database should be wiped when the script starts. That’s probably a good idea unless you’re doing some testing with parts of the script. Hence the default here is True.

- Line 20 holds the path where the script will look for the input files – by default that’s the imdb-list sub folder as mentioned above.

It might also be interesting to set the flag query_debug to True if you’re interested in a very detailed output of what the script is doing at each given time. Note: this has very heavy implications on the performance, therefore this setting should only be active during testing.

The options progress_count and commit_count define how often the script should print status information or write the data from memory to the database, respectively. With the latter a setting of -1 means that the data will only be persisted to disk in the very end of each file. This increases the memory footprint, and I advise against it – unless you have a good reason to do so.

Lastly, the parameter proc_all can assure that all input files will be processed. In case it’s set to False lines 497-504 in the main script tosql.py will define which files will be processed. This is also only for testing purposes:

Step 4: Prepare the Database

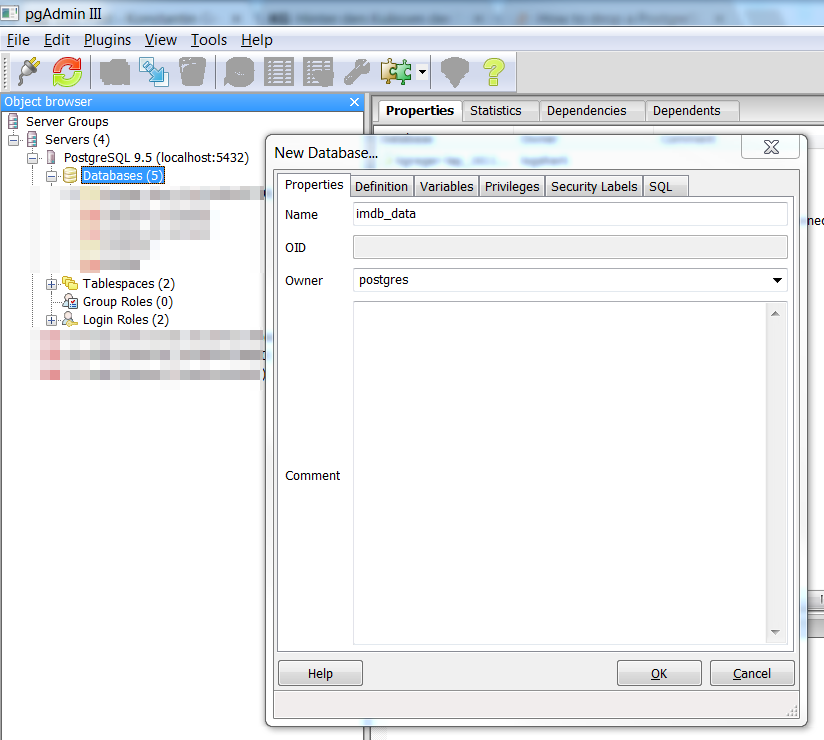

The PostgreSQL database our output data will be written to needs to be created before running the script. The easiest way to do that is a graphical user interface such as pgAdmin, which is either installed together with PostgreSQL or can be downloaded and installed manually from this website.

It can also be done on the command line. In this example, I opted for imdb_data as the database name, which means I won’t have to change it in the settings script (see above).

Another important aspect is the fact that the owner of this new database is the user referenced in line 14 of file settings.py – in my case postgres.

The resulting SQL statement:

Step 5: Run the Script

When all preparations are done we run the script! The main script is started by entering python tosql.py on the command line. We need to be in the correct folder, otherwise the path needs to be given as well.

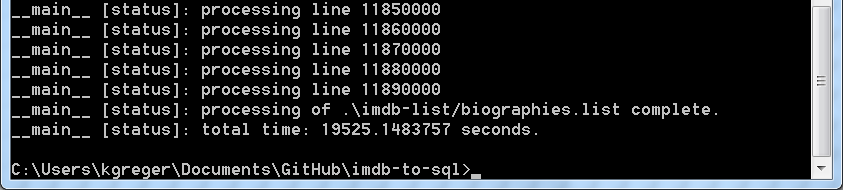

The code itself is neither very efficient nor highly performant, therefore the execution takes quite a while – about 5.5 hours on my mid-class laptop machine. But we all know the old software development adage: If code optimization takes longer than the time saved by it, it’s not really worth it. Ideally, this script will only run once, so…

During run time a few messages will appear on your screen:

You can easily ignore these. The script just came across a few data sets the regular expressions couldn’t handle properly – mostly because of inconsistent use of quotation marks in the text files. These data sets will be missing in the output data, but due to their very small number, I decided against looking into the regex issue any deeper.

The script ends with a print out of the total run time in seconds:

Step 6: Create Indices and Post-process Data

Indices help us with the performance of the IMDb data, which are waiting for us in the PostgreSQL database after the main script is done. We use the script index.py by entering python index.py on the command line to create the indices:

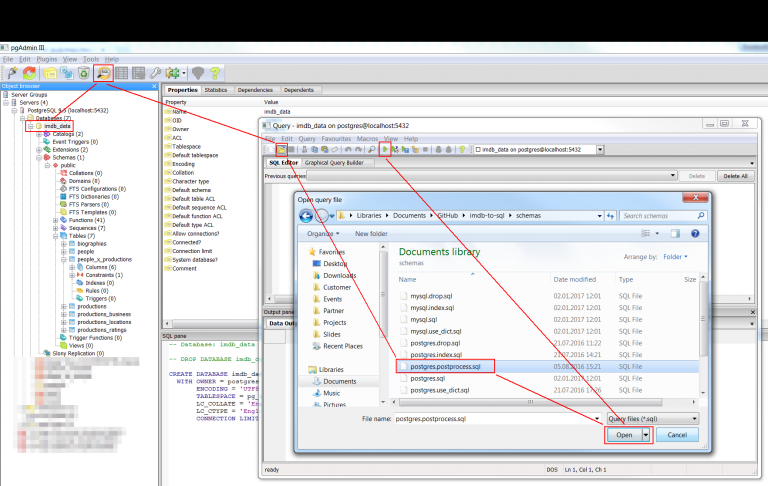

I didn’t create another Python script for the remaining changes, so we need to do those directly inside the PostgreSQL database, ideally using a graphical user interface like pgAdmin. The steps are contained in the file postgres.postprocess.sql in the schemas sub folder. It can be executed in one go:

After connection to the correct database (here imdb_data) we just need to click the Query button, select the Open button and the correct file (here postgres.postprocess.sql) and finally click on the Run button with the green play symbol.

If you prefer using the command line, you can issue the command using psql -U postgres -dimdb_data -a -f postgres.postprocess.sql

In order for this to work you should have the path to your PostgreSQL binaries (C:\Program Files\PostgreSQL\VERSION\bin) in your PATH variable. The sections in italics might need to be set accordingly.

If you’re planning to work with the IMDb raw data you should stop here and get started with your analyses!

Step 7: Done!

Congratulations, you now have the complete IMDb (well, the parts we downloaded…) in your PostgreSQL database, all indexed and nicely prepared for you to analyze away! Even though I don’t recommend it for performance reasons, this is how you can rebuild the complete data model in Tableau (For this you need Tableau Desktop Professional Edition):

The script postgres.postprocess.sql:

- Joins the data from table biographies to the people data in table people.

- Joins the production cost and box office results from table business to the productions in table productions.

I also added a few filters to iron out some very obvious data errors:

- people aged less than 0 or more than 120 years during production

- productions that cost less than 0 or more than 350 million US dollars

- had box office returns of less than 0 or more than 2.8 billion US dollars in total or more than 250 million US dollars on opening weekend. These values might have to be corrected at some point, but currently they match the real situation.

- Removed all productions that are neither a feature film nor a TV series, like e.g. computer games, DVDs and things like that.

Some of the field names in the database might still be a bit too cryptic for some people. For them I created a Tableau Data Source Tableau Kino Tour.tds (right-click > Save target as...). It uses exclusively the table productions – that’s what we used when we demoed this data during the Tableau Cinema Tour.

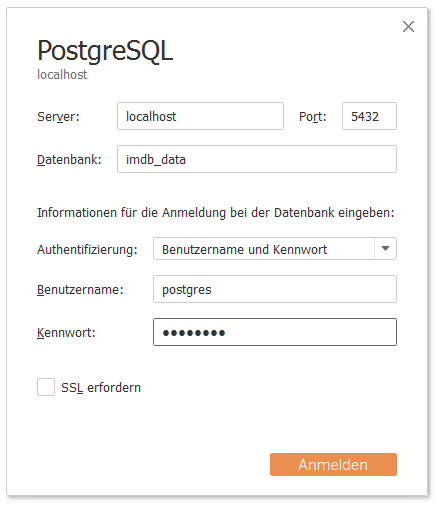

When loading up the .tds file, Tableau is going to ask for the PostgreSQL credentials (here postgres/postgres). If the PostgreSQL database is running on a remote system the connection parameters also need to be changed using Edit connection.

In order to get the highest possible performance out of our IMDb data we finally create a Tableau Extract (.tde file). The easiest way is to right-click on the data source “Tableau Kino Tour” on the top-left > Extract... and then click the button “Extract” in the newly opened dialog window.

Relaterade berättelser

Subscribe to our blog

Få de senaste Tableau-uppdateringarna i din inbox.