Tableau Agent: Building the Future of AI-Driven Analytics

Editor's Note: Originally published on the Salesforce Engineering Blog as part of the Engineering Q&A series. Read the Q&A with John He, Tableau Vice President of Software Engineering, who leads the development of Tableau Agent, formerly Einstein Copilot for Tableau.

What complexities were involved in building the AI infrastructure for Tableau Agent?

One of the primary challenges was prioritizing the development of a benchmarking system. This system collects data from various sources and plays a crucial role in evaluating the effectiveness of question and answer interactions. To optimize the data processing workflow, the team invested significant resources in developing telemetry and data pipelines.

Enhancing the intelligence capabilities of Tableau Agent was another key focus. This involved:

- Integrating both standard and customized models into the production environment. The Salesforce Einstein Trust Layer was utilized to protect the request and response. To ensure the suitability of these models, the team relied on the benchmarking system to evaluate model performance and make informed decisions.

- Grounding with authorized customer data. We send only the minimum necessary data to LLMs while maintaining top-level security. Metadata is used first for field identification, and the algorithm may then look into dimension values to make the context more precise.

- Generating code from enriched context, with Tableau best practices in mind. The generated code includes the calculation or Notional Spec, which the Viz platform converts into a visualization. The team aimed to ensure that customers could benefit from the best available options and that the intelligence provided was transparent. This meant avoiding a one-size-fits-all approach and instead tailoring the models to suit each customer’s individual needs.
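The grounding step in the list above can be illustrated with a minimal sketch. This is not Tableau Agent's implementation: the `Field` type, the `select_fields` function, and the naive token-overlap matching are hypothetical stand-ins, chosen only to show the idea of matching on metadata first and consulting dimension values second, so that only a small context is sent to the LLM.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field


@dataclass
class Field:
    """Hypothetical description of one data-source field."""
    name: str
    role: str  # "dimension" or "measure"
    sample_values: list[str] = field(default_factory=list)


def tokens(text: str) -> set[str]:
    """Lowercase word tokens, used for a naive overlap match."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_fields(question: str, fields: list[Field]) -> list[dict]:
    """Return a minimal context for the question instead of the full data."""
    q = tokens(question)
    context = []
    for f in fields:
        # Pass 1: match on metadata (the field name) only.
        if tokens(f.name) & q:
            context.append({"field": f.name, "role": f.role})
            continue
        # Pass 2: for dimensions, check whether a value is mentioned.
        if f.role == "dimension":
            hits = [v for v in f.sample_values
                    if (t := tokens(v)) and t <= q]
            if hits:
                context.append(
                    {"field": f.name, "role": f.role, "values": hits}
                )
    return context


# Made-up fields and question, for illustration only.
fields = [
    Field("Sales", "measure"),
    Field("Region", "dimension", ["East", "West"]),
    Field("Category", "dimension", ["Furniture", "Technology"]),
    Field("Customer Name", "dimension", ["Ann Lee"]),
]
context = select_fields("Total sales for furniture in the West", fields)
```

In this toy run, `Customer Name` is left out of the context entirely, and only the matched values of `Region` and `Category` are included. A production system would presumably use far more robust matching than token overlap.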

What obstacles does your team face in terms of accuracy and efficiency while developing Tableau Agent?

First, we face the challenge of accurately interpreting user questions and translating them into the appropriate code in Tableau’s programming languages, including VizQL and Notional Spec. While we have a working model for this, we are continuously striving to improve its accuracy and reduce hallucinations in the translation.

Additionally, we are tackling the problem of knowledge generation in analytics. This involves enhancing prerequisite knowledge, which includes addressing data quality, reliability, accuracy, data source updates, data coverage, and potential limitations or biases. We also focus on generating descriptive knowledge that provides accurate and informative summaries specific to different domains.

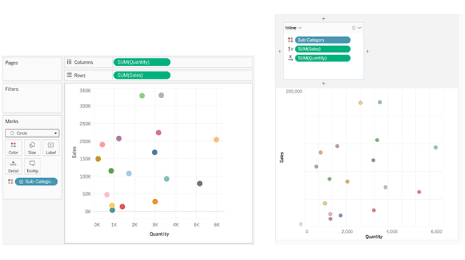

Lastly, we are working on automating visualization generation based on user data and queries, much as OpenAI's Sora can generate video from a few prompts. To achieve this, we are developing a supervised fine-tuned LLM that can efficiently transform data into multimodal answers with visualizations. This significantly reduces the time and effort required for analysis. The resulting visualizations can be rendered in Tableau or presented in Tableau Agent's chat box, providing users with quick and insightful visual representations of their data.

What were the key technical challenges for improving the AI and core capabilities of Tableau Agent?

One of the primary challenges was finding more data to train and improve the intelligence of the system. To address this, the team shifted its focus to:

- Synthesized data. We are continuously refining our analytical data generation algorithms to enhance the quality of the model and streamline the process.

- Tableau Public. A community-driven platform that hosts millions of reports, visualizations and data sources. This platform attracts a large user base and collects a diverse range of data. By providing free access to the technology, the Tableau community can also contribute their data, further enriching the dataset.

Additionally, the team needed to balance Tableau Agent's accuracy, speed and creativity, which required careful tuning. The team developed a novel analytical specification that speeds up the interpretation of user intent for Tableau system capabilities while maintaining accuracy. To balance accuracy and creativity in Tableau Agent, the team leveraged the temperature concept from LLMs. Temperature controls how much randomness, and therefore creativity, appears in AI responses, so adjusting it lets the team trade creativity against accuracy. Keeping the temperature lower reduces the risk of hallucination, helps keep responses grounded in factual information, and provides users with reliable and accurate insights that meet their expectations.
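To make the temperature idea concrete, here is a small sketch of temperature-scaled softmax, the standard mechanism behind the temperature setting in LLM sampling. The logits are made-up numbers for illustration and do not reflect Tableau Agent's actual values or settings.

```python
import math


def softmax_with_temperature(logits: list[float],
                             temperature: float) -> list[float]:
    """Turn raw scores into probabilities after dividing by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate next tokens.
logits = [2.0, 1.0, 0.5]

low = softmax_with_temperature(logits, 0.2)   # sharper distribution
high = softmax_with_temperature(logits, 1.5)  # flatter distribution
```

At the lower temperature the highest-scoring token takes nearly all of the probability mass, so sampling is close to deterministic. At the higher temperature the alternatives become more likely, which adds variety but also raises the chance of an unexpected answer.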

Continuous improvement was another crucial aspect the team prioritized. To enhance the user experience of Tableau Agent, an experimental platform called Zeus was utilized. This platform facilitates systematic engineering, intent detection, and knowledge generation, enabling the team to identify areas for improvement and continuously enhance the capabilities of Tableau Agent.

The end-to-end architecture of Tableau Agent, formerly Einstein Copilot for Tableau.

Can you explain the collaboration between the Tableau Agent team and the Salesforce AI Research team?

The collaboration is key for tackling the tough technical challenge of improving Tableau Agent's question relevance and response quality. To address this, the team collects questions that may be unrelated to the user’s query. These problematic questions are then forwarded to the AI Research team, who use the data to build models that would help improve the quality of Tableau Agent's responses over time.

One example of collaboration involved intent classification. While Tableau Agent's software could support multiple intents, there was still an error rate of around 20% in classifying user intent. To address this, our team collected feedback data, including user feedback and test cases, and shared it with the AI Research team, who then built a smaller, fine-tuned language model specifically for intent classification, aiming to improve accuracy and reduce the error rate.

How do you approach AI testing and quality assurance for Tableau Agent?

As mentioned, we have developed a benchmarking system that we continuously build upon. This asset covers various domains and industry data, allowing us to thoroughly test different scenarios and ensure high coverage.

Next, we leverage the AI capabilities of Tableau Agent itself for testing. We use one model to generate answers and then employ another one to grade them. This cross-testing approach helps us validate the accuracy and reliability of the AI-generated responses.
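The generate-then-grade loop described above can be sketched as follows. This is only an illustration under stated assumptions: `evaluate`, `toy_generator`, and `toy_grader` are hypothetical names, and the two toy functions stand in for the answering model and the grading model so the example runs without any model or network access.

```python
from typing import Callable

# Hypothetical signatures: one callable answers, another scores the answer.
Generator = Callable[[str], str]
Grader = Callable[[str, str, str], float]


def evaluate(benchmark: list[tuple[str, str]],
             generate: Generator,
             grade: Grader,
             threshold: float = 0.5) -> float:
    """Return the fraction of benchmark questions whose answer passes."""
    if not benchmark:
        raise ValueError("benchmark must not be empty")
    passed = 0
    for question, reference in benchmark:
        answer = generate(question)                 # model under test
        score = grade(question, reference, answer)  # second model grades
        if score >= threshold:
            passed += 1
    return passed / len(benchmark)


def toy_generator(question: str) -> str:
    """Stand-in for the answering model: a canned lookup."""
    canned = {"Which region has the highest sales?": "West"}
    return canned.get(question, "unknown")


def toy_grader(question: str, reference: str, answer: str) -> float:
    """Stand-in for the grading model: exact match, ignoring case."""
    same = answer.strip().lower() == reference.strip().lower()
    return 1.0 if same else 0.0


# Made-up benchmark items, for illustration only.
benchmark = [
    ("Which region has the highest sales?", "West"),
    ("Which category has the lowest profit?", "Tables"),
]
pass_rate = evaluate(benchmark, toy_generator, toy_grader)
```

Keeping the generator and grader as separate callables mirrors the idea of using a different model to grade than to answer, and makes it easy to swap either one when comparing candidate models against the same benchmark.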

Additionally, customer feedback and vendor labeling data are utilized to optimize Tableau Agent's models and enhance its out-of-the-box capabilities.

By combining these approaches, we ensure accurate results and maintain the highest standards of quality.

Learn More

- Read the Tableau Agent news announcement

- See Tableau Agent in action

- Stay connected—join the Salesforce and Tableau Engineering Talent Community!

- Check out our Technology and Product teams to learn how you can get involved.
