3 data governance considerations for deploying NLP in the enterprise

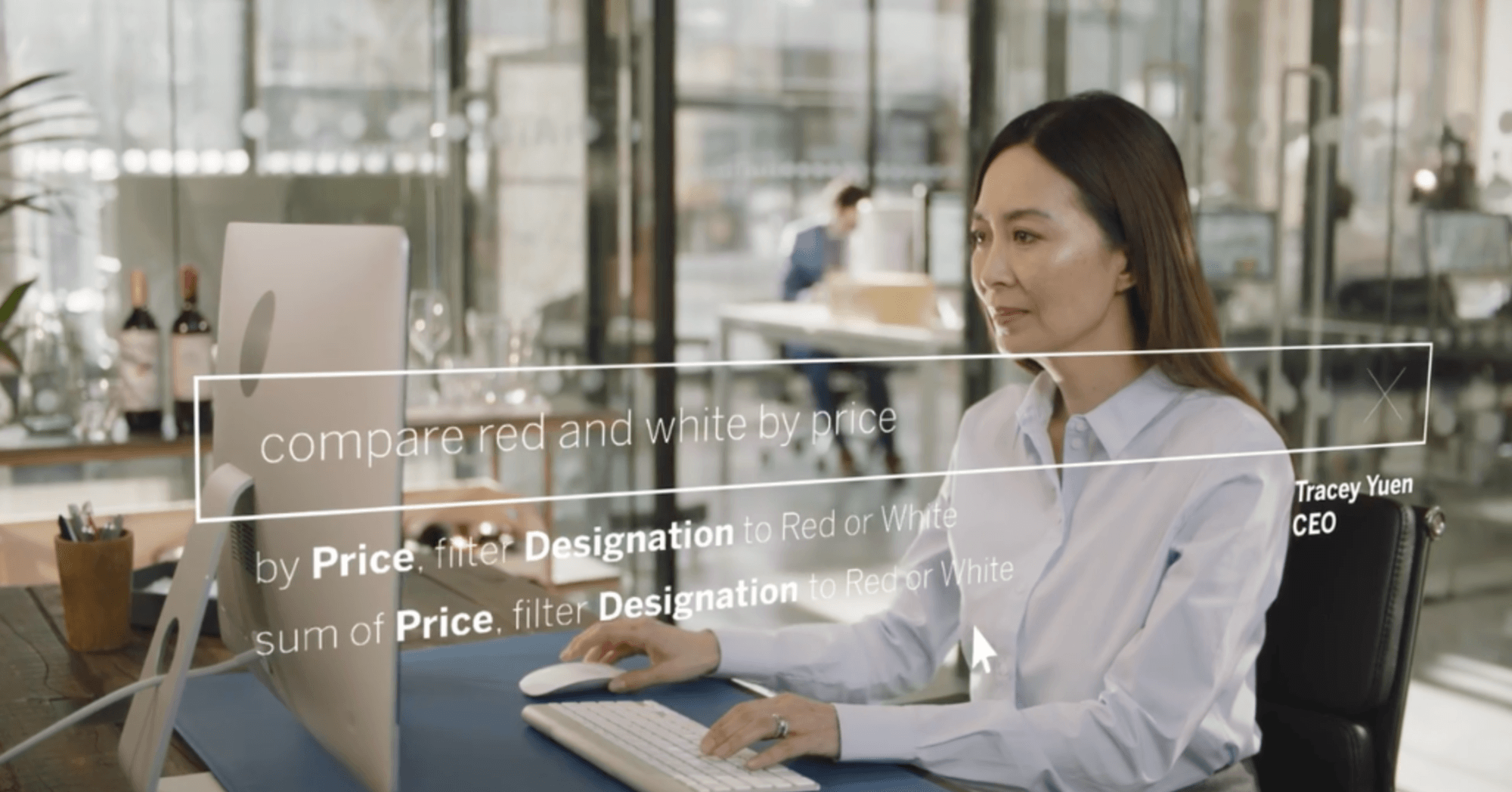

There is a lot of buzz around natural language and more business intelligence (BI) vendors are adding a natural language interface so that users can ask questions by simply conversing with their data. This opens up a lot of powerful possibilities for people of all skill sets, but what does this mean for data governance in your organization?

Natural language processing (NLP) expands self-service BI by lowering the barrier to analytics and providing a new way to interact with data. It’s important to have clear guidelines around people and processes so you can take advantage of all of the benefits of natural language, while maintaining a secure, governed environment. When NLP is part of the analytics platform, the data governance principles you’ve defined should extend to this new type of interaction with some additional considerations.

If you are considering deploying NLP within your organization, review these data governance processes to get started on the right foot.

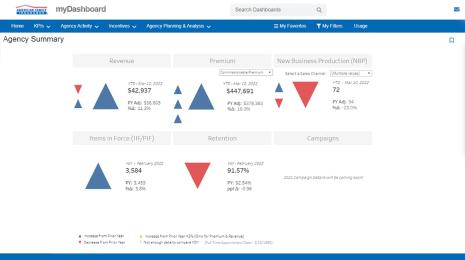

1. Data source management supports self-service NLP access

Data source management refers to the processes related to the selection and distribution of data within your organization. As natural language becomes more common within self-service analytics deployments, it’s even more critical that an organization has a selection of relevant, certified, and maintained data sources for people to discover, access, and analyze. Define the process to select use cases among the audiences for sources of data and prioritize the curation of these data sources by impact and audience size.

A hallmark of good data management is the balance between people and process. NLP systems typically inherit the permissions assigned to a data source, so it’s even more important to make sure that the right people have access to those sources. A dedicated data steward (or team of stewards) should create policies that empower people to find the right data for their work while minimizing data source proliferation. Answering questions through a natural language system relies on this structure. To ensure that people can answer their questions, they must understand how the existing data sources relate to those questions.

2. Metadata management helps people get the answers they need

Metadata management is an extension of data source management and includes policies and processes that ensure information can be accessed, shared, analyzed and maintained across the organization. Metadata adds business context to data to make it easier for people to analyze. When looking at your business data, it’s wise to ask whether or not the fields in your curated data sources are immediately understandable to the average business user.

To get the most out of natural language capabilities for BI platforms, it is important to anticipate the types of questions that your users will ask of the data, just like when you’re building a dashboard. As a data steward, once you’ve identified these questions, you may need to reshape your data, adding join operations or related data prep functions to get the data in the right shape for analysis so it is ready for NLP users to consume. More clarity in the metadata model means that NLP users can receive the right answer from the NLP system. These efforts will also extend to everyone leveraging your self-service analytics environment, not just NLP users.

To start, work these steps into your metadata management:

- Filter and size to the analysis at hand

- Use standard, user-friendly naming conventions

- Add field name synonyms

- Create hierarchies (drill paths)

- Set data types

- Apply formatting (dates, numbers)

- Set fiscal year start date, if applicable

- Add new calculations

- Remove duplicate or test calculations

- Enter field descriptions as comments

- Aggregate to highest level

- Hide unused fields

3. Data quality establishes trust in information

Data quality is a measure of data's fitness to serve its purpose in a given context—in this case, for making business decisions—and high-quality data is critical in order to take advantage of the benefits of NLP. Messy data can be a costly problem. Data that is poorly structured, full of inaccuracies, or just plain incomplete can have big implications on self-service analysis, and that includes NLP. NLP enables a broader group of users with a range of analytical skills to interact with data, and therefore, data quality is a must so that users can trust the results returned by the NLP system. If you plan to curate a data source for a NLP system, it is a good idea to focus on a set of fields that are relevant to your audiences’ business questions. This helps with overall trust in the data, which supports your self-service model—and most importantly, it means that people feel confident making decisions.

Reviewing these considerations will help you get a good start to a successful NLP deployment across users of all skill sets. See for yourself by trying Ask Data, Tableau’s NLP capability, which allows people to get insights by simply conversing with their data.

For more information about curating data for Ask Data, read this whitepaper, Preparing data for natural language interaction in Ask Data.

Verwante verhalen

Subscribe to our blog

Ontvang de nieuwste updates van Tableau in je inbox.