Don’t sweep dirty data under the rug: A three-part approach to data prep

Editor's note: Today’s blog post comes from Gordon Strodel, Information Management & Analytics Consultant at Slalom. Slalom is a consulting firm that helps companies solve business problems and build for the future.

Watch the webinar "Dirty data is costing you: 4 ways to tackle common data prep issues" to hear Gordon, Nationwide's Jason Harmer, and Tableau's Andy Cotgreave discuss actionable ways to overcome data prep issues.

There’s an old analogy about an explosion in a junkyard resulting in a Cadillac. It just doesn’t happen. In the same way, clean, well-structured data doesn’t just appear in an organization’s lap. It takes care, planning, and attention to detail. Bad data can appear for a variety of reasons: it’s caused, it’s designed, it’s created with the worst or best of intentions, or it's created with NO intentions.

Cleaning up dirty data can be frustrating—and on an organizational level, dealing with the root cause of dirty data is often just as messy. At Slalom, we like building solutions to problems. For the Information Management and Analytics team that I'm on, those problems often relate to data.

When we try to solve problems for our clients—including those related to dirty data—we try to take a three-part approach.

1. Technology: Find the right tool for the job

Technology is like the tools you use to fix your car. You need to determine the best one for the job, situation, and timeframe. You’re also influenced by your resources, including your training and your supplies. Why use a wrench when a socket set would be more efficient? And although many tools can handle many jobs, there are generally only one or two recommended tools for a specific job. You can dig a hole with a spoon, a backhoe, or a nuclear bomb, but one of those is better suited than the others.

2. People: Serve the people who are using the tool(s)

The people side of the equation is harder to measure, but it is just as important as the other two areas. Continuing the analogy, your people will drive the car. Consider who is using the tool(s), the training they’ll need, and the factors that will determine sustained success with the initiative. To transition effectively, you need a way to measure the transition, so you can use that information to encourage others and tell the success story. A new car will change the way you drive, and in the same way, new technology often means that people have to change the way they work. Having an agreed-upon unit of measure will ensure that everyone is getting the attention they need as part of the change.

We find that companies often have long consideration processes when deciding which technology to buy and how to build a process to keep it stable. But sometimes, these companies don’t think about the people who must support the technology, including the training they’ll need to be successful with it.

As Dow Chemical pointed out a few years ago in their advertisement series on the Human Element, only in the realm of the influence of people does the chemistry occur. When working with bits and blocks of data, you need to understand the people using the data to fully understand its context. And in the same way, understanding HOW and WHY the data got there stems from an understanding of WHO put it there. Data prep is ultimately for people, to make their lives easier; let’s not lose sight of that fact.

3. Process: Build for success, support, and sustainability

If technology is the automotive tool, and people the driver, then the process is the mechanic and the service manual. This is the "glue" that allows the three areas to work together to help achieve the project's goal. Technology without good process and trained people gives you more problems. Good people with bad technology give you different issues. By considering the process, you build the success, the support, and the sustainability for your people and their technology. Establish governance standards and frameworks to be successful, but strike a balance that ensures that the driver can get to where they need to go.

Applying the framework to the data

How do we apply this Technology-People-Process framework to data cleaning and preparation? Well, while the framework is simple, the execution is nuanced. Here's my advice:

Find your tool: Discover the tools that help make your job more efficient, easier, repeatable, and measurable.

Build a light, yet robust process to regularly review data, flag issues, close issues, and measure progress: Many of our clients call this a "Center of Excellence" model. Others call it a "working group." If you don’t regularly review existing data for issues, you risk creating more bad data in your attempt to fix other issues!

Invest in training and development: Invest in time to train all users on the importance of data quality. Leverage like-minded people in your company with subject-matter expertise and a passion for data. They might just be the Tableau analyst sitting next to you.

Automate when you can: Once you have your tools and process in place, and your people are trained, work to automate everything. You might find that certain issues cannot be cleaned up in the source, but that a very simple set of rules will fix them. In that case, work with your database administrators to schedule a cron job to clean up the data automatically, so you don’t constantly need to account for the issue in your workflow. Alternatively, you could look at using predictive models or building other logic into your system to regularly adjust data issues.
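To make the "very simple set of rules" idea concrete, here is a minimal Python sketch of a rule-based cleanup. Everything in it is an assumption for illustration: the column names (`state`, `phone`), the alias table, and the helper functions are hypothetical, and a real cleanup would run against your own tables with rules agreed on by your data quality working group.

```python
"""Rule-based cleanup sketch. Column names and rules are hypothetical."""

# Hypothetical lookup of known variants to one canonical value.
STATE_ALIASES = {
    "calif.": "CA", "california": "CA", "ca": "CA",
    "wash.": "WA", "washington": "WA", "wa": "WA",
}


def clean_state(value):
    """Map known variants of a state name to its two-letter code."""
    key = value.strip().lower()
    # Unrecognized values are only trimmed, so a person can review them later.
    return STATE_ALIASES.get(key, value.strip())


def clean_phone(value):
    """Format 10-digit numbers as XXX-XXX-XXXX; otherwise just trim."""
    digits = "".join(ch for ch in value if ch.isdigit())
    if len(digits) == 10:
        return f"{digits[:3]}-{digits[3:6]}-{digits[6:]}"
    return value.strip()


# One rule per column (hypothetical column names).
RULES = {"state": clean_state, "phone": clean_phone}


def clean_rows(rows):
    """Apply each rule to its column; return cleaned rows and a change count."""
    cleaned, changes = [], 0
    for row in rows:
        new_row = dict(row)  # copy so the input rows are not modified
        for column, rule in RULES.items():
            if column in new_row:
                fixed = rule(new_row[column])
                if fixed != new_row[column]:
                    changes += 1
                new_row[column] = fixed
        cleaned.append(new_row)
    return cleaned, changes


if __name__ == "__main__":
    sample = [
        {"state": " Calif. ", "phone": "(555) 123-4567"},
        {"state": "WA", "phone": "555-987-6543"},
    ]
    rows, changed = clean_rows(sample)
    print(rows)
    print("values changed:", changed)
```

A script along these lines could be what the scheduled job runs. The change count it returns is one possible unit of measure for tracking whether the underlying issue is shrinking over time.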

Determine the root cause of the problem: If you're a doctor and your patient has a broken arm and is bleeding out, what do you fix first? First stop the bleeding, then deal with the arm. Similarly, with data problems, determine the root causes of bad data and stop them from occurring. Then go back and conduct a retrospective clean-up of any historic data issues. Keep in mind, many of the fixes could be people- or process-related, not technology!

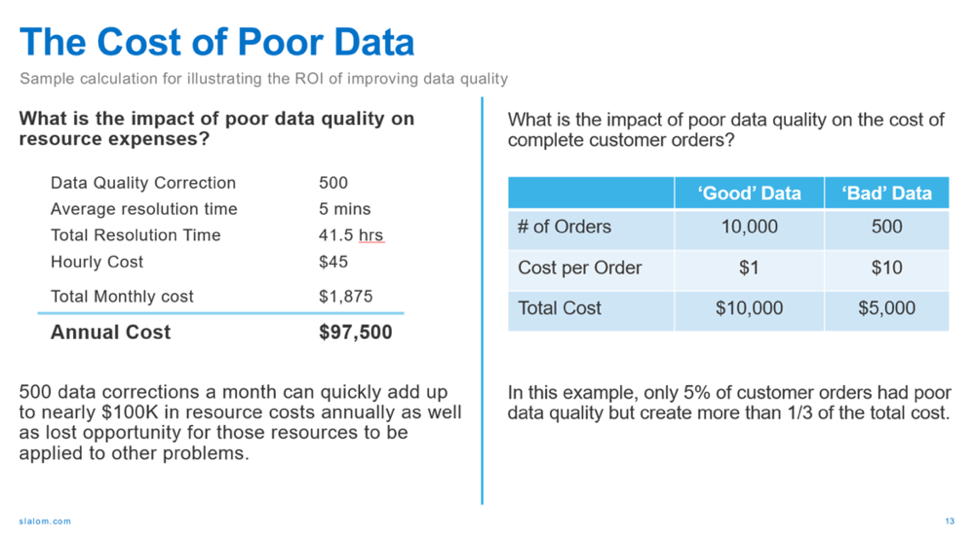

Tell a story of the impact of bad data: Here at Slalom, we have a slide to illustrate the cost of dirty data, see below. *Hint*—it's not cheap, especially if you have someone cleaning the data manually. This story can also help in the quest to secure automation or additional technology resources to save time.

Slide courtesy of Slalom

An excuse to be [Tableau] Prep(y)

From my perspective, Tableau Prep is one of those pieces of technology that begins to address all three areas of our framework: technology, people, and process. As a piece of technology, Tableau Prep is radically easy to use and, some might say, even a pleasure to work with! It has the tools you need, the community to support you, and the native ability to play in the Tableau ecosystem.

For process, Tableau Prep fits easily into the Tableau analyst's tool belt, right between Tableau Desktop and your source data. It makes regular data routines easy to build, run, and re-run later. Tableau Prep gives your data analysts or subject-matter experts the right tools to identify and flag data issues for the data quality working group.

From a people perspective, it's clear that Tableau has designed Prep with actual humans in mind. It's easy to use, pretty to look at, and communicates well. And did I mention it’s intuitive? If you’re already familiar with data prep, you really don't need a whole lot of additional training to learn it. And if you’re new to data prep, there are free training resources you can use.

Learn more about cleaning up dirty data

Read this article on how to solve common data preparation issues. And join us for a webinar on how organizations can overcome common data prep struggles. We hope to see you there!
