User Ex Machina: Simulation as a Design Probe in Human-in-the-Loop Text Analytics

This blog post was co-written by Anamaria Crisan and Michael Correll, and is associated with our upcoming paper for CHI 2021, “User Ex Machina: Simulation as a Design Probe in Human-in-the-Loop Text Analytics.” For more information, read the paper!

People increasingly rely on machine learning (ML) models to help them organize and make decisions with their data. Collections of texts are no exception: it would be daunting and labor-intensive for a person to read through hundreds or thousands of text documents and make sense of them all. One ML solution is to group documents together into topics. These topic models are regularly used to summarize, cluster, or otherwise organize and describe large numbers of documents.

When topic models work, they are great: you can track what people are talking about in different documents, filter out irrelevant documents, and quickly identify important themes. However, when these models fail, it can be difficult for people to intervene to correct or refine the model’s output. Sometimes there can also be a mismatch between the amount of refinement a person wants to perform and the way the model reacts to this intervention: a minor adjustment can suddenly result in a totally different set of topics, for example. So we might want to mess with a topic model, but there’s the risk that what we’d try to do might make things worse. As machine translation pioneer Frederick Jelinek put it: “every time I fire a linguist, the performance of the speech recognizer goes up.” There’s always a danger whenever we start poking our noses into big complicated things without understanding how they work. Our work was an attempt to measure how the kinds of fine-tuning that we might want to do with topic models could impact the results.

Adding Humans In The Loop

Humans structure the data that are collected for machine learning (we sometimes are the data that are collected) and determine how–and whether–machine learning models impact the ultimate decision-making process. But what is less clear is how people should intervene in the precise shaping and building of models in the flow of analysis, what is commonly called “human-in-the-loop machine learning” (“HILML”). On the one hand, we as humans bring important semantic context and oversight to models: unsupervised, there’s no telling what sort of brittle, biased, or nonsensical outcomes might result. On the other hand, the complex and occasionally counter-intuitive nature of machine learning models means that our attempts to improve performance can backfire: we should be wary about sticking our hands into complex machinery while it’s running, and code is no exception to that rule. Text analytics is an important acid test for HILML thinking: human beings have rich and complex connections with language, and machine learning models of text often struggle to capture that richness.

Measuring Model Impact

Our work looks at how proposed HILML actions impact text models, using topic modeling as our initial test bed. We created a pipeline for simulating actions on a topic model. Texts go in, a model comes out, and somewhere in the middle (either early on, adjusting how the texts are prepared or cleaned for the topic model, or later on, adjusting the topics that came out of the process), a simulated user takes a simulated action. We then measured how big a splash this action made. This measurement can take a lot of forms, and so we employed a variety of metrics about the topics themselves, their relationship to ground-truth themes in the texts, the stability and coherency of the resulting thematic clusters of documents, and even how these changes were (or were not) visible in common topic model visualization techniques.
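
To make the shape of such a pipeline concrete, here is a minimal sketch in Python. It is not the code from our paper: `toy_model` is a deliberately crude stand-in for a real topic model, the four texts are made up, and the Rand index is just one of many possible ways to score how much a simulated action moved documents between topics.

```python
from collections import Counter
from itertools import combinations

def prepare(texts, stopwords):
    """Preparation step: lowercase, split on whitespace, drop stopwords."""
    return [[w for w in t.lower().split() if w not in stopwords] for t in texts]

def toy_model(docs, n_topics):
    """Toy stand-in for a topic model (not LDA): the n_topics most frequent
    terms act as topics; each document joins the topic whose term it uses most."""
    counts = Counter(w for d in docs for w in d)
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))  # ties broken alphabetically
    seeds = [w for w, _ in ranked[:n_topics]]
    return [max(range(len(seeds)), key=lambda k: d.count(seeds[k])) for d in docs]

def rand_index(a, b):
    """Share of document pairs that both assignments treat the same way
    (together in one topic, or apart in different topics)."""
    pairs = list(combinations(range(len(a)), 2))
    return sum((a[i] == a[j]) == (b[i] == b[j]) for i, j in pairs) / len(pairs)

# Made-up example corpus.
texts = [
    "the cat chased the mouse",
    "the cat slept on the mat",
    "the stock market fell",
    "the market rallied as the stock rose",
]

# Baseline run: no cleaning at all.
baseline = toy_model(prepare(texts, stopwords=set()), n_topics=2)

# Simulated user action: remove a few stopwords before modeling.
after = toy_model(prepare(texts, stopwords={"the", "on", "as"}), n_topics=2)

# "Splash" of the action: 0 means identical groupings, 1 means total disagreement.
impact = 1 - rand_index(baseline, after)
print(baseline, after, round(impact, 2))  # [0, 0, 0, 0] [0, 0, 1, 1] 0.67
```

In this toy run, the uncleaned texts all collapse into a single "the" topic, while removing three stopwords splits them into two groups. A real pipeline would swap in an actual topic model and a wider set of metrics.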

What we found was somewhat surprising. Many of the proposed HILML systems for text that we saw involve a user interacting with a model after it has been generated (say, “this word doesn’t look like it belongs to this topic, let me filter it out” or “hmm, these topics seem like they are connected, let me merge them together”). But in our simulation, most of the “action,” in terms of making big changes to the results, came from choices in how to clean and prepare the texts, before the topic modeling was even performed! These preparatory actions are often hidden from users of HILML systems, set to smart defaults, or fully automated in an unsupervised way. Our work suggests that these preparatory actions might deserve more consideration. If the system performs poorly, the input data is likely to be a larger factor than the output topics, and HILML in that case might just be rearranging deck chairs on the textual Titanic.

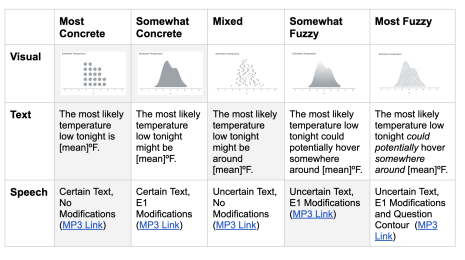

Another concerning finding was that it was possible to take an action, even an action that we’d expect to be drastic, and have almost nothing change in the resulting visualization of the model. For instance, changing the number of topics to generate is often thought of as a particularly disruptive action, but it only rarely resulted in prominent changes in our model. Since topic models are such complex things, and the document collections they are applied to are often massive and complex themselves, existing visualizations often only show a subset of the most popular or most informative topics and texts. This means that if an action only changes less popular topics, it can appear to the user that nothing at all is different under the hood.
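
A small Python sketch shows how this can happen. Everything here is hypothetical: the topic names, sizes, and terms are invented, and `visible_view` is a simplified stand-in for a visualization that only displays the k largest topics.

```python
def visible_view(topics, k):
    """Return what a top-k visualization would show: the k largest topics
    (by document count) and their top terms."""
    ranked = sorted(topics.items(), key=lambda kv: (-kv[1]["size"], kv[0]))
    return [(name, tuple(info["terms"])) for name, info in ranked[:k]]

# Hypothetical model before the user's action.
before = {
    "t0": {"size": 120, "terms": ["game", "team", "season"]},
    "t1": {"size": 95, "terms": ["market", "stock", "price"]},
    "t2": {"size": 12, "terms": ["recipe", "oven", "flour"]},
    "t3": {"size": 8, "terms": ["visa", "travel", "flight"]},
}

# Hypothetical model after reducing the number of topics: the two small
# topics were merged, the two large ones were left untouched.
after = dict(before)
after["t2"] = {"size": 20, "terms": ["recipe", "travel", "oven"]}
del after["t3"]

print(before == after)                                    # False: the model changed
print(visible_view(before, 2) == visible_view(after, 2))  # True: the view did not
```

The model has one fewer topic, yet a user looking only at the two largest topics would see exactly the same picture.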

It’s these sorts of mismatches between the intended scale of the impact of the user interaction and the actual impact on the model where we think the biggest challenges and opportunities for HILML lie: a model that is completely different from the one we started with is probably fine, from a user experience standpoint, if we set out to make big, disruptive changes. But if we just wanted to adjust a hyperparameter or two and the metaphorical ground shifts out from under us, then we’re in trouble.

Building Better Human-in-the-Loop Systems for Machine Learning

Our findings led us to a set of three recommendations for designers of future HILML systems:

- Surface provenance and data cleaning operations to the user (if they are where most of the important changes can happen, then users should know about what happened!)

- Alert users to actions that are disruptive, since our intuitions might not be good guides for what will or won’t cause big changes to our model.

- Guide users to fruitful actions or states. Our simulations showed that variability in accuracy and model quality can occur even for minor actions. Rather than force the user to blindly mess around until they find a good spot to be in, perhaps the system can be a little proactive and do some simulation or exploring on its own.
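
As a rough illustration of the second and third recommendations, a system could try out candidate actions behind the scenes and report how disruptive each one would be before the user commits. The Python sketch below is one hypothetical way to do that: the candidate actions and their resulting topic assignments are made up, the 0.5 threshold is arbitrary, and `fraction_changed` assumes topic labels stay aligned between runs (which a real system would need to ensure).

```python
def fraction_changed(before, after):
    """Share of documents whose topic label differs between two runs.
    Assumes topic labels are aligned across runs."""
    return sum(x != y for x, y in zip(before, after)) / len(before)

def preview(baseline, candidates, threshold=0.5):
    """Score each candidate action and flag the disruptive ones.
    Returns (action, change, is_disruptive) tuples, gentlest action first."""
    report = []
    for name, result in candidates.items():
        change = fraction_changed(baseline, result)
        report.append((name, change, change >= threshold))
    return sorted(report, key=lambda r: r[1])

# Hypothetical topic assignment for six documents, plus the assignments
# that three simulated actions would produce.
baseline = [0, 0, 1, 1, 2, 2]
candidates = {
    "remove a word from a topic": [0, 0, 1, 1, 2, 1],
    "merge topics 1 and 2": [0, 0, 1, 1, 1, 1],
    "re-run with stemming": [1, 2, 0, 2, 0, 1],
}

for name, change, disruptive in preview(baseline, candidates):
    flag = "DISRUPTIVE" if disruptive else "ok"
    print(f"{name}: {change:.2f} ({flag})")
```

In this made-up case the two post-hoc edits move at most a third of the documents, while the change to text preparation reshuffles all of them and would be flagged.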

We also think that there’s plenty more to do on the research side. Can the ML model learn about the user while it’s learning about the data and try to anticipate potential next actions? What sort of things do users even want to do to models, anyway, and what are the best ways of expressing (in terms of user interface or user experience) or fulfilling (in terms of model corrections or adjustments) those requests? In general, using what we’ve learned from decades of human-computer interaction research, how can we design interactions with ML models in a more human-centered way?

There’s plenty more information in the paper. And if you’re interested in trying out (or extending!) our simulation pipeline, we’ve got code for that too!
