MyALPO: How we built the search functionality

Note: This is the last installment of a three-part series on MyALPO, an internal tool that provides a personalized homepage for every Tableau Server user. The first part outlines how we designed MyALPO, and the second part details technical lessons learned.

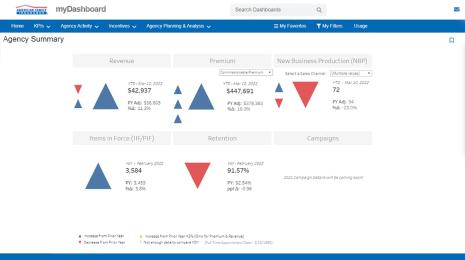

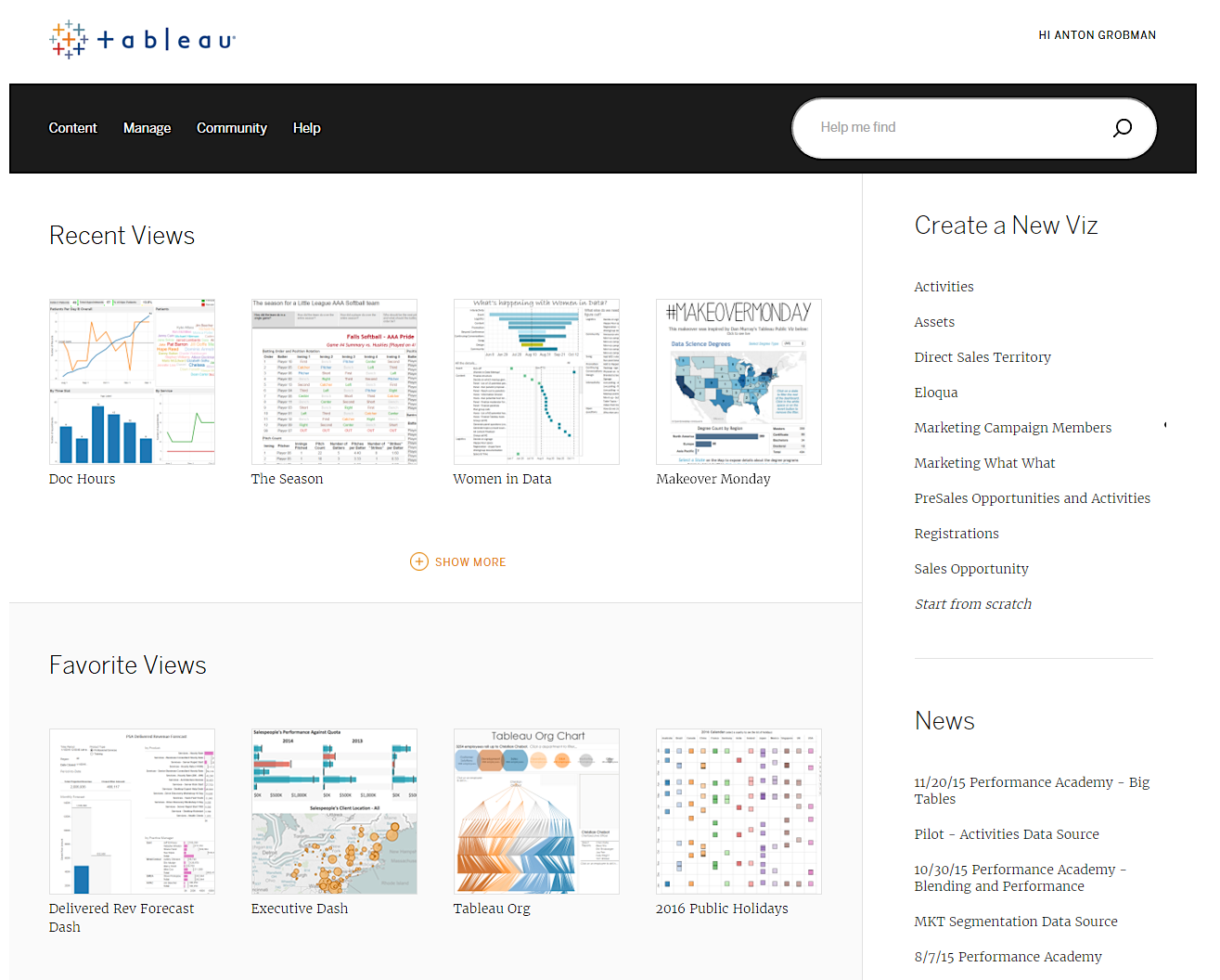

When we were building MyALPO, our internal portal to Tableau Server, we knew we wanted to include search functionality.

User research showed people relied heavily on search to find content on Tableau Server. So we knew that if MyALPO didn’t include this capability, it wouldn’t suffice as a first-stop portal. We wanted people to start with MyALPO and use it to find relevant content on Tableau Server.

But it turns out search is a pretty complicated thing to build. When we sat down to build the functionality, we had more questions than direction. We weren’t quite sure how to approach the problem, and we couldn’t find any resources on the web to guide us.

We did have one important tool at our disposal: access to Tableau developers. Working with those who know our product best, we managed to build a powerful search tool. In the process, we learned many lessons—some of them the hard way. So we thought we’d share them with you and save you the trouble.

Logging in to the webclient API

We had to mimic the search calls on Tableau Server and get the same exact results. But due to security restrictions, we had to build this on a server external to the hardware that Tableau Server runs on.

If you get logs of the network traffic (using Fiddler, for example) of a user performing searches on the Tableau Server front end, you can see that it makes five calls to a vizportal API. Internally, we call this the webclient API. Before we can make these calls in our portal, we have to log in to the webclient API.

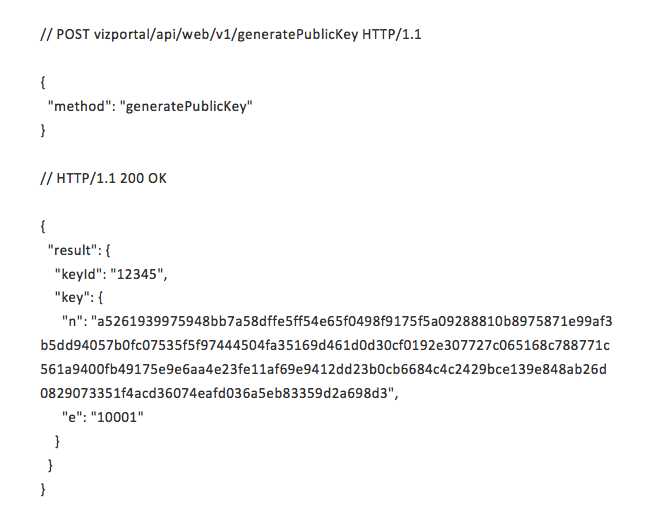

To do this, you first need to make a request using the Generate Public Key call. An example of this call looks like this:

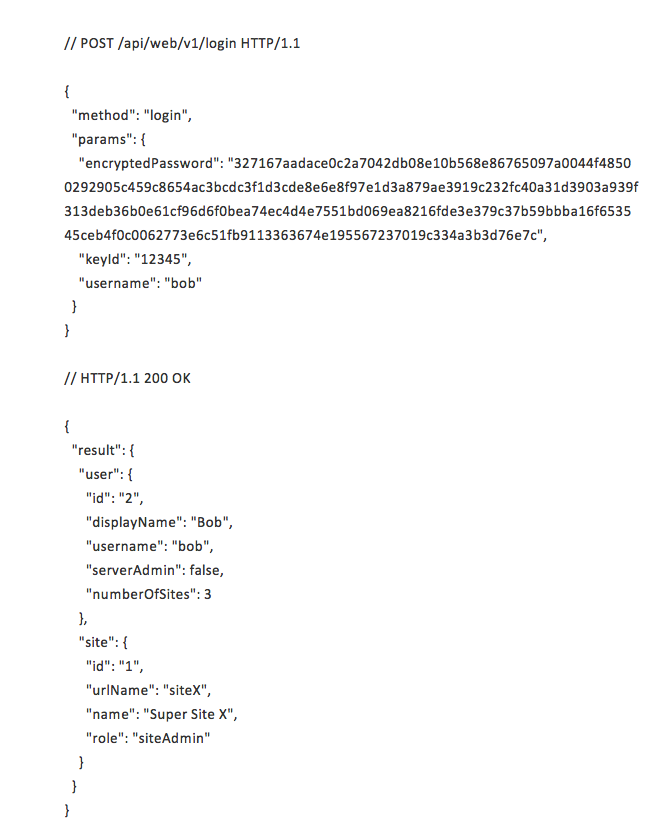

The response from Tableau Server is then used to encrypt the user’s password. Using the result’s modulus, “n,” and exponent, “e,” encrypt the user’s password in JavaScript with the CRYPT_RSA_ENCRYPTION_PKCS1 encryption mode. The encrypted password is then passed into the login API call as part of the body. An example of the login call looks like this:

Along with the response body, you also get two cookies you need to hold onto: the workgroup_session_id and the XSRF-TOKEN. Because these calls do not pass any cookies, they can be made from outside Tableau Server. (The webclient API also supports Kerberos and SSPI logins, but I will not go into that here.)
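The encryption step can be sketched as follows. This is only an illustration under assumptions: it uses Node's built-in crypto module in place of whichever RSA library your page uses, it assumes the modulus and exponent arrive as hex strings, and the login body shape in buildLoginBody is hypothetical. Copy the real field names from your own network log.

```javascript
const crypto = require("crypto");

// Left-pad odd-length hex so Buffer.from(..., "hex") does not drop a digit.
function hexToBase64Url(hex) {
  const even = hex.length % 2 === 0 ? hex : "0" + hex;
  return Buffer.from(even, "hex").toString("base64url");
}

// Encrypt the password with RSA PKCS#1 v1.5 padding, using the modulus "n"
// and exponent "e" (assumed here to be hex strings) from the public key response.
function encryptPassword(password, nHex, eHex) {
  const publicKey = crypto.createPublicKey({
    key: { kty: "RSA", n: hexToBase64Url(nHex), e: hexToBase64Url(eHex) },
    format: "jwk",
  });
  return crypto
    .publicEncrypt(
      { key: publicKey, padding: crypto.constants.RSA_PKCS1_PADDING },
      Buffer.from(password, "utf8")
    )
    .toString("hex");
}

// Hypothetical login body; the field names are assumptions, not a documented API.
function buildLoginBody(username, encryptedPassword, keyId) {
  return JSON.stringify({
    method: "login",
    params: { username, encryptedPassword, keyId },
  });
}
```

Because PKCS#1 v1.5 padding is randomized, encrypting the same password twice gives different output, so the ciphertext cannot be cached and compared.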

Making five calls to the API

To replicate the search functionality, we need to make five calls to the API. Those calls are to query views, workbooks, data sources, users, and projects. All five calls are similar and relatively simple.
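Since the five calls are so similar, they can be generated from one template. The sketch below is illustrative only: the method names and the params layout are hypothetical stand-ins, so replace them with what you see in your own Fiddler log.

```javascript
// Hypothetical method names for the five search calls (assumptions, not a documented API).
const SEARCH_METHODS = {
  views: "getViews",
  workbooks: "getWorkbooks",
  dataSources: "getDatasources",
  users: "getUsers",
  projects: "getProjects",
};

// Build one request body per content type for the same search term.
// The filter and page structure is a placeholder for whatever your log shows.
function buildSearchBodies(term, pageSize = 10) {
  return Object.entries(SEARCH_METHODS).map(([type, method]) => ({
    type,
    body: JSON.stringify({
      method,
      params: {
        filter: { operator: "and", clauses: [{ operator: "matches", value: term }] },
        page: { startIndex: 0, maxItems: pageSize },
      },
    }),
  }));
}
```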

All of the specifics for the calls can be reverse-engineered from a Fiddler log. What makes these calls difficult to make from an external server is that when you try to pass the session id and token as cookies in the POST call, you hit the browser’s cross-origin restrictions.

In theory, you can enable cross-origin resource sharing (CORS) on your Tableau Server, but that poses serious security risks. Instead, we can create a proxy on Tableau Server, through a Web Data Connector (WDC), to make our POST calls. Tableau Zen Master Tamás Földi explains exactly how to do this in his blog post “The Big CORS Debate: Tableau Server and External AJAX Calls.” We’ve made a few additions to Tamás’s method, which I’ll explain.

To start this process, we need to capture what the user typed in a textbox, construct the POST request as a string, and include the specific workgroup_session_id and the XSRF-TOKEN. Pass that string to the WDC proxy through an iframe on your site.
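A minimal sketch of that hand-off might look like the following. It assumes window.postMessage is the transport into the iframe, and the property names inside the message are made up for illustration.

```javascript
// Pack one request body plus the two login cookie values into a single string.
// The property names are illustrative, not part of any Tableau API.
function buildProxyMessage(requestBody, sessionId, xsrfToken) {
  return JSON.stringify({
    workgroupSessionId: sessionId,
    xsrfToken: xsrfToken,
    request: requestBody,
  });
}

// Browser side: hand the string to the proxy iframe. Naming the proxy's origin
// explicitly means the browser will not deliver the message to any other page.
function sendToProxy(iframe, message, proxyOrigin) {
  iframe.contentWindow.postMessage(message, proxyOrigin);
}
```

Passing a specific origin rather than "*" as the second argument matters here, because the message carries session credentials.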

On the WDC proxy side, make sure you validate that the string is coming from a known source and that the content is not malicious. Then parse the string, extracting the session id and token values. Create cookies with those two values, and make sure they expire at the same time as the cookies in the client’s API response. Pass the rest of the call to Tableau Server as you see it in your Fiddler log. After the call has been made successfully, delete the two cookies on the Tableau Server side.
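The validation and parsing on the proxy side could be sketched like this. The allowed origin, the message property names, and the character set accepted for the two values are all assumptions made for the example, so adjust them to what your own portal sends.

```javascript
// Origins allowed to talk to the proxy; the value is a placeholder.
const ALLOWED_ORIGINS = new Set(["https://portal.example.com"]);

// Validate an incoming message and extract the pieces the proxy needs.
// Returns null for anything that fails a check.
function parseProxyMessage(origin, data) {
  if (!ALLOWED_ORIGINS.has(origin) || typeof data !== "string") return null;
  let msg;
  try {
    msg = JSON.parse(data);
  } catch (err) {
    return null;
  }
  if (msg === null || typeof msg !== "object") return null;
  const { workgroupSessionId, xsrfToken, request } = msg;
  // Assumed conservative character set, so the values are safe to place in a cookie.
  const safe = /^[A-Za-z0-9|_\-]+$/;
  if (typeof workgroupSessionId !== "string" || !safe.test(workgroupSessionId)) return null;
  if (typeof xsrfToken !== "string" || !safe.test(xsrfToken)) return null;
  if (typeof request !== "string") return null;
  return { workgroupSessionId, xsrfToken, request };
}

// Build a document.cookie string; passing a date in the past deletes the cookie.
function cookieString(name, value, expires) {
  return `${name}=${value}; expires=${expires.toUTCString()}; path=/`;
}
```

In the browser, the origin argument would come from the message event's origin property, and the cookie strings would be assigned to document.cookie before and after the POST call.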

Take the response and return it to your site through the iframe. On your portal site, validate that the source and content are not malicious and, using JavaScript, parse the response to populate your search results.

Matching calls and responses

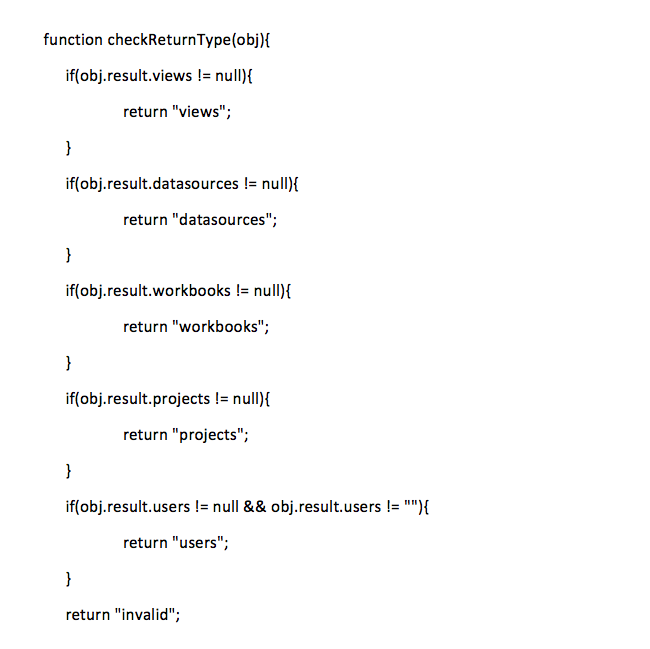

When making five calls at the same time from an external server, it’s not clear which response came from which call. But we noticed that the five responses are not identical. If you check them in the proper order, you will always have a perfect match of call and response. Here is our response-identifying function:

From here you should be able to identify your response and pull the appropriate values out of the JSON to populate your search results.
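To illustrate why the order of checks matters, here is a minimal sketch of an order-dependent identifier. It is not the MyALPO code: the key names, the result wrapper, and the ordering are hypothetical, and they rest on the assumption that a response can also carry keys for related content (for example, a views response that also lists workbooks).

```javascript
// Hypothetical keys, ordered from the most specific response to the least.
// Checking in this order keeps a response that carries several keys from
// being mistaken for a less specific one.
const RESPONSE_KEYS = ["views", "workbooks", "datasources", "projects", "users"];

// Return the first key found in the response's result object, or null if none match.
function identifyResponse(response) {
  const result = (response && response.result) || {};
  for (const key of RESPONSE_KEYS) {
    if (Object.prototype.hasOwnProperty.call(result, key)) return key;
  }
  return null;
}
```

Compare your own five responses side by side to work out which keys are unique to each and which order resolves the overlaps.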

Dealing with growth: What’s working? What’s not?

Once you try building your own internal tool, send us your feedback. Let us know which parts have proven useful and which parts need improvement. Or, if you’ve implemented a solution of your own, do share with us what you’ve learned.

Learn more about building a culture of self-service analytics

Data Diaries: A Hiring Guide for Building a Data-Driven Culture

Data Diaries: How VMware Built a Community around Analytics

Data Diaries: Defining a Culture of Self-Service Analytics

Building a Culture of Self-Service Analytics? Start with Data Sources

In a Culture of Self-Service Analytics, Enablement Is Crucial

Boost Your Culture of Self-Service Analytics with One-on-One Support
